Week-4 (Tree Data Structures)

CE205 Data Structures¶

Week-4¶

Tree Data Structure Types and Applications (Binary Tree, Tree Traversals, Heaps)¶

Download PDF,DOCX, SLIDE, PPTX

Outline¶

-

Graph Representation Tools

-

Tree Structures and Binary Tree and Traversals (In-Order, Pre-Order, Post-Order)

-

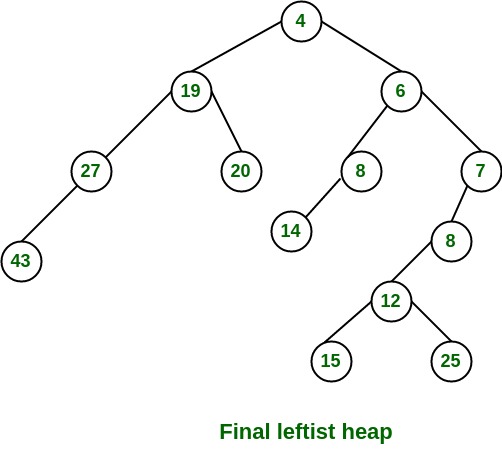

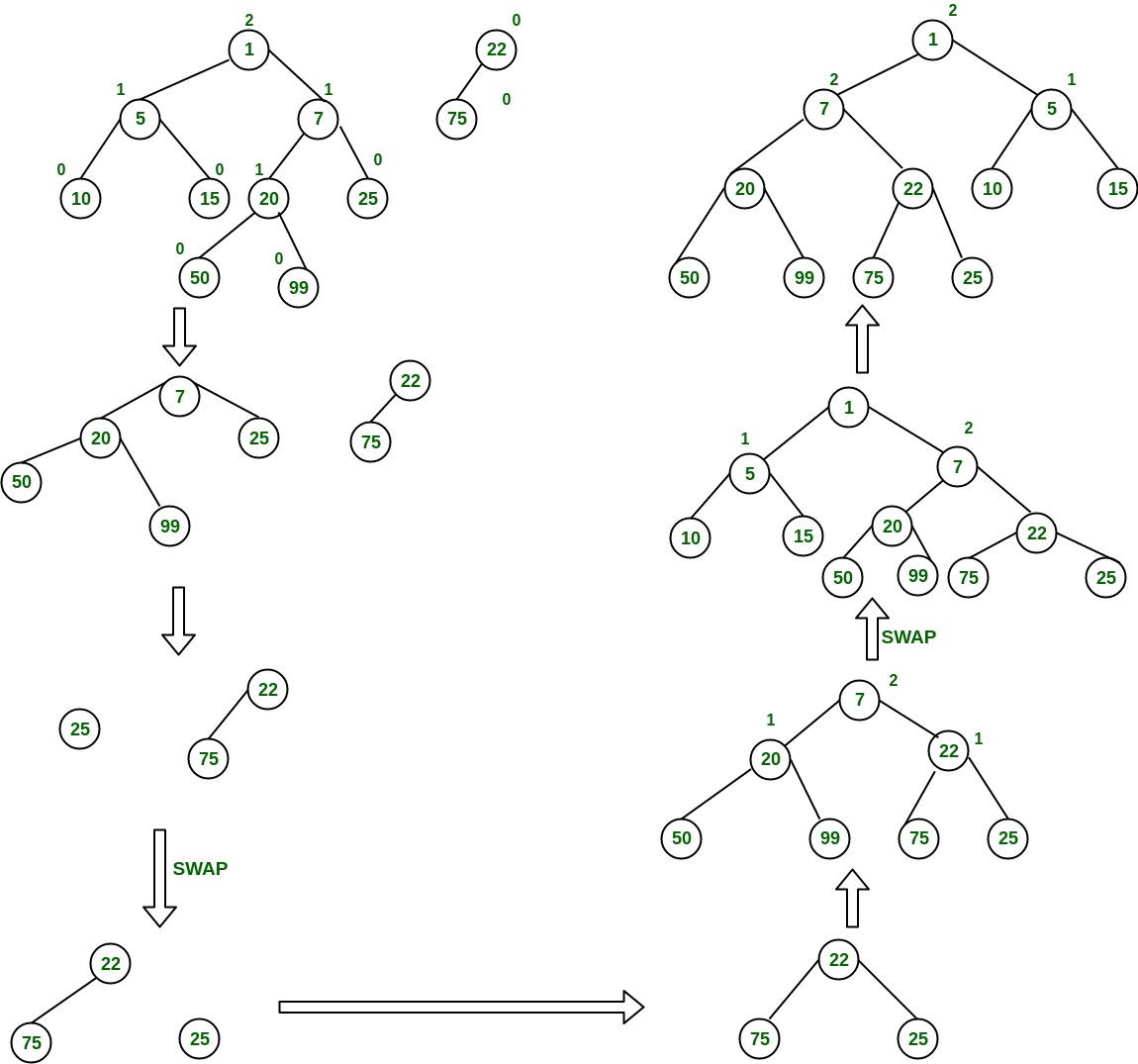

Heaps (Max, Min, Binary , Binomial, Fibonacci, Leftist, K-ary) and Priority Queue

-

Heap Sort

-

Huffman Coding

Graph Representation Tools¶

- Microsoft Automatic Graph Layout

- https://www.microsoft.com/en-us/download/details.aspx?id=52034

- https://github.com/microsoft/automatic-graph-layout

- Graphviz

- https://graphviz.org/resources/

- Plantuml

- https://ucoruh.github.io/ce204-object-oriented-programming/week-5/ce204-week-5/#calling-plantuml-from-java_1

Graph Representation Tools¶

Microsoft Automatic Graph Layout¶

using System;

using System.Collections.Generic;

using System.Windows.Forms;

class ViewerSample {

public static void Main() {

//create a form

System.Windows.Forms.Form form = new System.Windows.Forms.Form();

//create a viewer object

Microsoft.Msagl.GraphViewerGdi.GViewer viewer = new Microsoft.Msagl.GraphViewerGdi.GViewer();

//create a graph object

Microsoft.Msagl.Drawing.Graph graph = new Microsoft.Msagl.Drawing.Graph("graph");

//create the graph content

graph.AddEdge("A", "B");

graph.AddEdge("B", "C");

graph.AddEdge("A", "C").Attr.Color = Microsoft.Msagl.Drawing.Color.Green;

graph.FindNode("A").Attr.FillColor = Microsoft.Msagl.Drawing.Color.Magenta;

graph.FindNode("B").Attr.FillColor = Microsoft.Msagl.Drawing.Color.MistyRose;

Microsoft.Msagl.Drawing.Node c = graph.FindNode("C");

c.Attr.FillColor = Microsoft.Msagl.Drawing.Color.PaleGreen;

c.Attr.Shape = Microsoft.Msagl.Drawing.Shape.Diamond;

//bind the graph to the viewer

viewer.Graph = graph;

//associate the viewer with the form

form.SuspendLayout();

viewer.Dock = System.Windows.Forms.DockStyle.Fill;

form.Controls.Add(viewer);

form.ResumeLayout();

//show the form

form.ShowDialog();

}

}

Graph Representation Tools¶

Microsoft Automatic Graph Layout¶

MSAGL Modules¶

The Core Layout engine (AutomaticGraphLayout.dll) - NuGet package This .NET asssembly contains the core layout functionality. Use this library if you just want MSAGL to perform the layout only and afterwards you will use a separate tool to perform the rendering and visalization.

MSAGL Modules¶

The Drawing module (AutomaticGraphLayout.Drawing.dll) - NuGet package The Definitions of different drawing attributes like colors, line styles, etc. It also contains definitions of a node class, an edge class, and a graph class. By using these classes a user can create a graph object and use it later for layout, and rendering.

MSAGL Modules¶

A WPF control (Microsoft.Msagl.WpfGraphControl.dll) - NuGet package The viewer control lets you visualize graphs and has and some other rendering functionality. Key features: (1) Pan and Zoom (2) Navigate Forward and Backward (3) tooltips and highlighting on graph entities (4) Search for and focus on graph entities.

MSAGL Modules¶

A Windows Forms Viewer control (Microsoft.Msagl.GraphViewerGdi.dll) - NuGet package The viewer control lets you visualize graphs and has and some other rendering functionality. Key features: (1) Pan and Zoom (2) Navigate Forward and Backward (3) tooltips and highlighting on graph entities (4) Search for and focus on graph entities.

Custom MSAGL Demo Project¶

-

Clone and test your self

-

Also you can find another example in this homework

Custom MSAGL Demo Project¶

Custom MSAGL Demo Project¶

Tree Structures and Binary Tree and Traversals (In-Order, Pre-Order, Post-Order)¶

Tree - Terminology¶

- Btech Smart Class

- http://www.btechsmartclass.com/data_structures/tree-terminology.html

Tree - Terminology¶

-

In linear data structure data is organized in sequential order and in non-linear data structure data is organized in random order.

-

A tree is a very popular non-linear data structure used in a wide range of applications. A tree data structure can be defined as follows.

-

Tree is a non-linear data structure which organizes data in hierarchical structure and this is a recursive definition.

-

A tree data structure can also be defined as follows

-

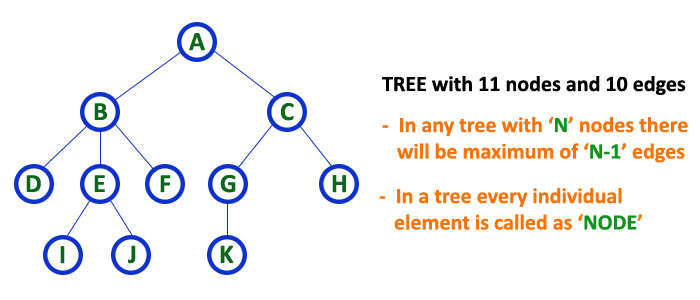

Tree data structure is a collection of data (Node) which is organized in hierarchical structure recursively

-

In tree data structure, every individual element is called as Node.

-

Node in a tree data structure stores the actual data of that particular element and link to next element in hierarchical structure.

-

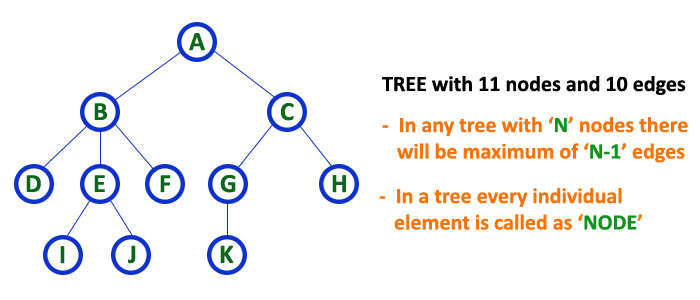

In a tree data structure, if we have N number of nodes then we can have a maximum of N-1 number of links.

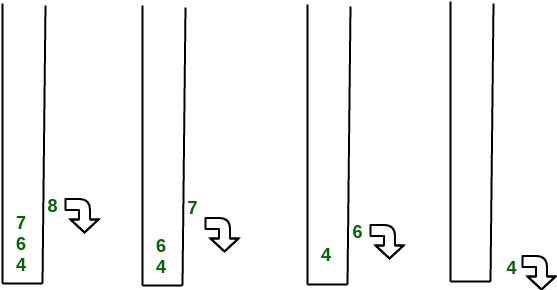

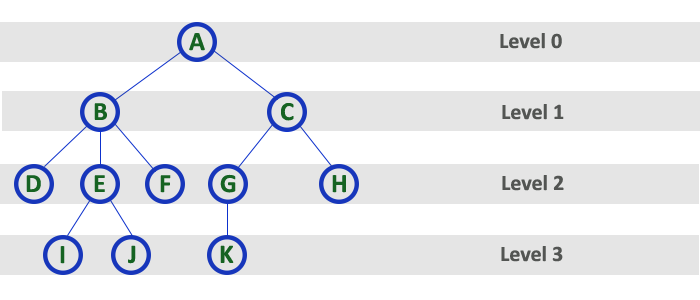

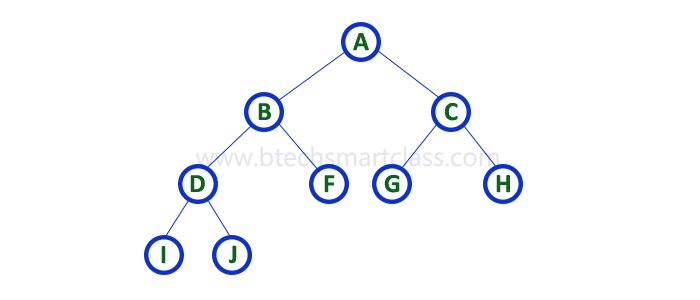

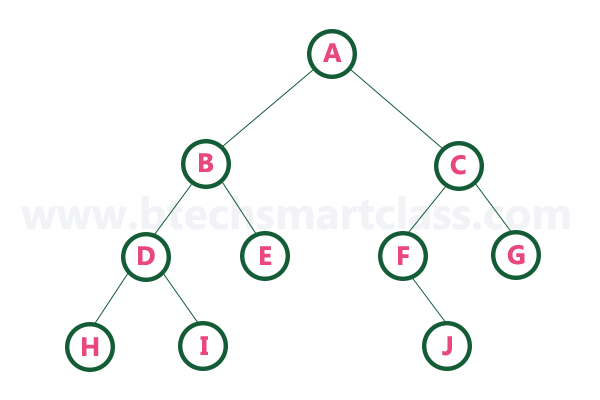

Tree Example¶

Tree Terminology¶

- In a tree data structure, we use the following terminology

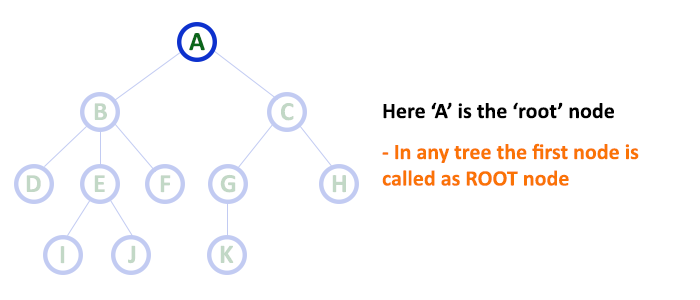

1. Root¶

-

In a tree data structure, the first node is called as Root Node.

-

Every tree must have a root node.

-

We can say that the root node is the origin of the tree data structure.

-

In any tree, there must be only one root node.

-

We never have multiple root nodes in a tree.

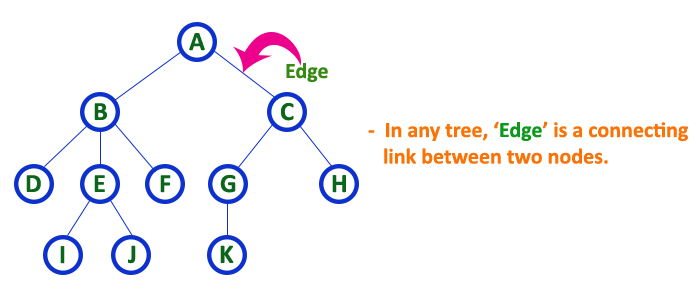

2. Edge¶

- In a tree data structure, the connecting link between any two nodes is called as EDGE. In a tree with 'N' number of nodes there will be a maximum of 'N-1' number of edges.

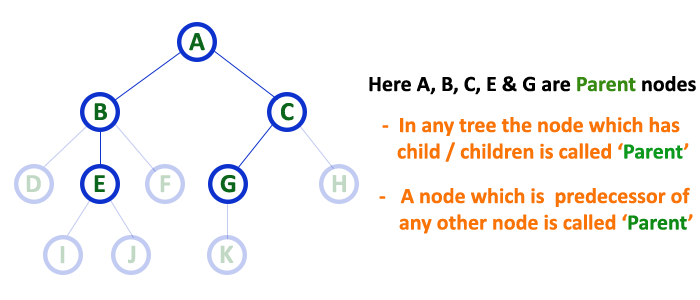

3. Parent¶

-

In a tree data structure, the node which is a predecessor of any node is called as PARENT NODE.

-

In simple words, the node which has a branch from it to any other node is called a parent node.

-

Parent node can also be defined as "The node which has child / children".

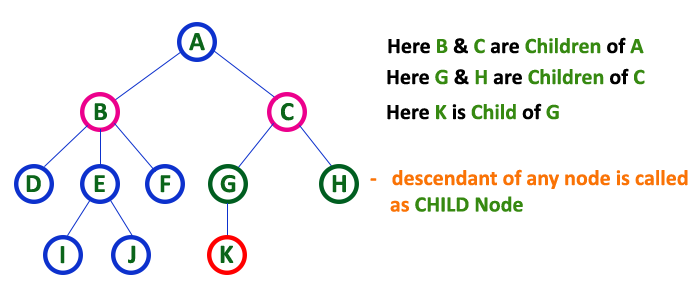

4. Child¶

-

In a tree data structure, the node which is descendant of any node is called as CHILD Node. In simple words, the node which has a link from its parent node is called as child node.

-

In a tree, any parent node can have any number of child nodes. In a tree, all the nodes except root are child nodes.

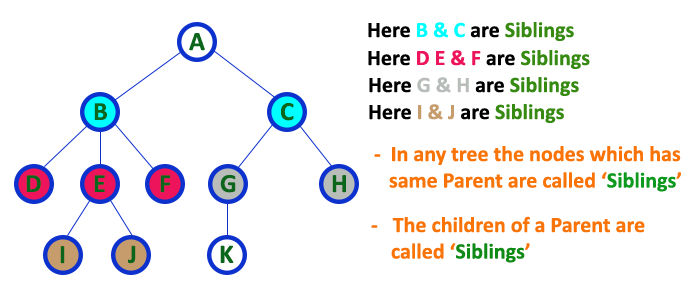

5. Siblings¶

-

In a tree data structure, nodes which belong to same Parent are called as SIBLINGS

-

In simple words, the nodes with the same parent are called Sibling nodes.

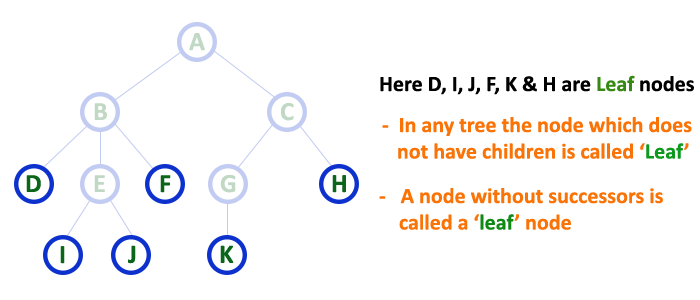

6. Leaf¶

-

In a tree data structure, the node which does not have a child is called as LEAF Node.

-

In simple words, a leaf is a node with no child.

-

In a tree data structure, the leaf nodes are also called as External Nodes. External node is also a node with no child.

-

In a tree, leaf node is also called as 'Terminal' node.

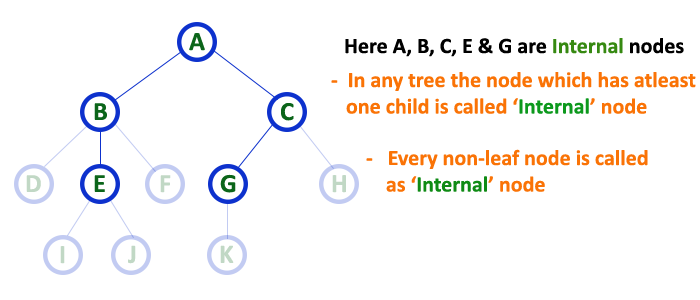

7. Internal Nodes¶

-

In a tree data structure, the node which has atleast one child is called as INTERNAL Node. In simple words, an internal node is a node with atleast one child.

-

In a tree data structure, nodes other than leaf nodes are called as Internal Nodes. The root node is also said to be Internal Node

-

if the tree has more than one node. Internal nodes are also called as 'Non-Terminal' nodes.

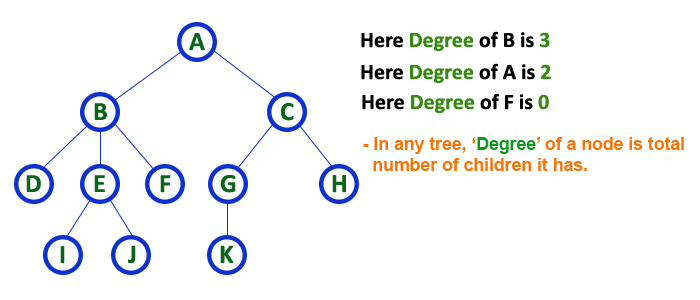

8. Degree¶

-

In a tree data structure, the total number of children of a node is called as DEGREE of that Node. In simple words, the Degree of a node is total number of children it has.

-

The highest degree of a node among all the nodes in a tree is called as 'Degree of Tree'

9. Level¶

-

In a tree data structure, the root node is said to be at Level 0 and the children of root node are at Level 1 and the children of the nodes which are at Level 1 will be at Level 2 and so on

-

In simple words, in a tree each step from top to bottom is called as a Level and the Level count starts with '0' and incremented by one at each level (Step).

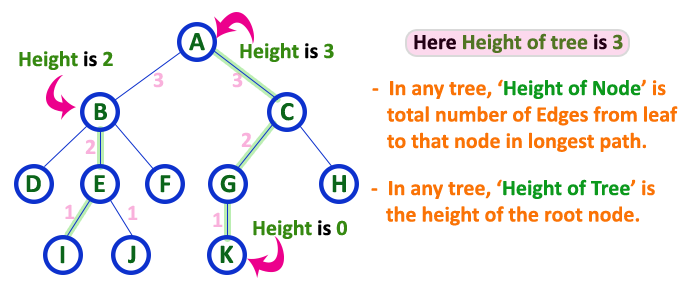

10. Height¶

-

In a tree data structure, the total number of edges from leaf node to a particular node in the longest path is called as HEIGHT of that Node.

-

In a tree, height of the root node is said to be height of the tree.

-

In a tree, height of all leaf nodes is '0'.

-

In a tree data structure, the total number of egdes from root node to a particular node is called as DEPTH of that Node.

-

In a tree, the total number of edges from root node to a leaf node in the longest path is said to be Depth of the tree.

-

In simple words, the highest depth of any leaf node in a tree is said to be depth of that tree.

-

In a tree, depth of the root node is '0'.

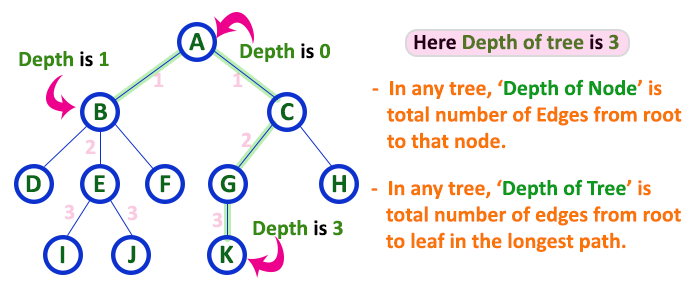

12. Path¶

-

In a tree data structure, the sequence of Nodes and Edges from one node to another node is called as PATH between that two Nodes.

-

Length of a Path is total number of nodes in that path. In below example the path A - B - E - J has length 4.

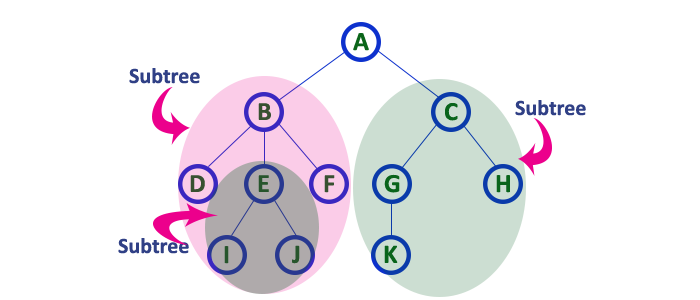

13. Sub Tree¶

-

In a tree data structure, each child from a node forms a subtree recursively.

-

Every child node will form a subtree on its parent node.

Tree Representations¶

- Btech Smart Class

- http://www.btechsmartclass.com/data_structures/tree-representations.html

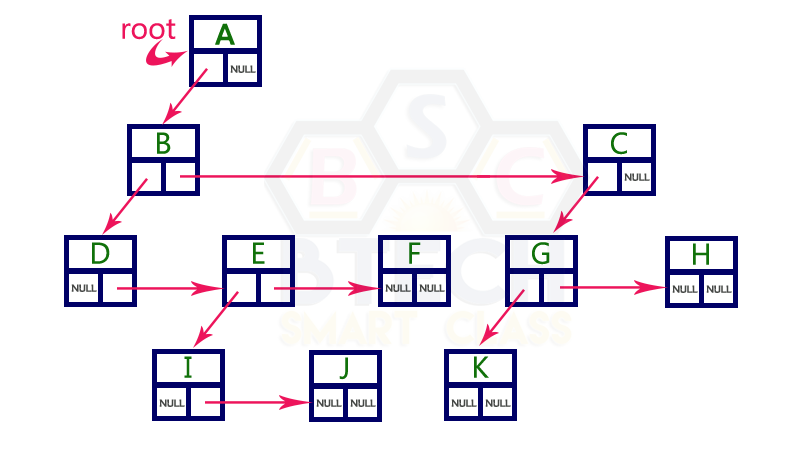

Tree Representations¶

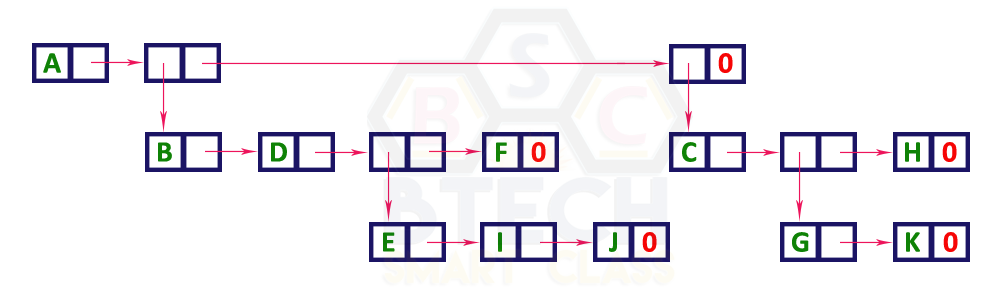

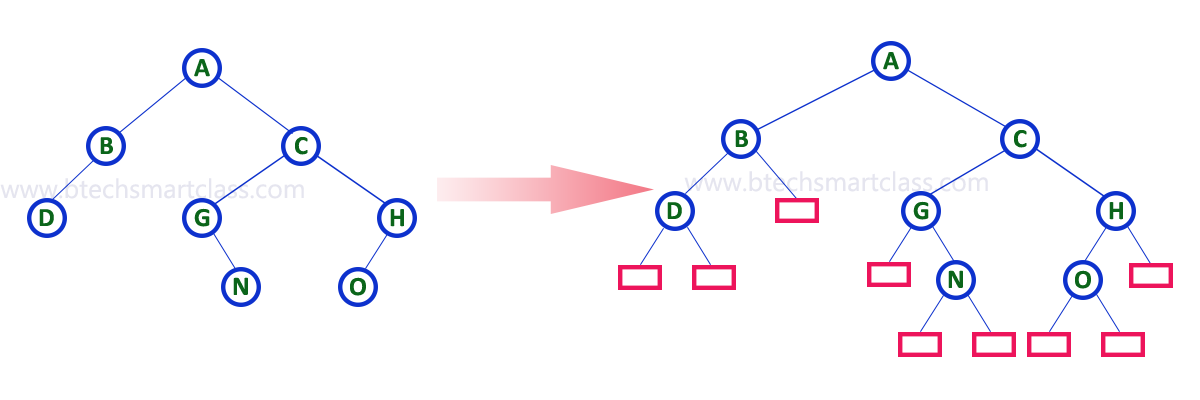

A tree data structure can be represented in two methods. Those methods are as follows.

- List Representation

- Left Child - Right Sibling Representation

Consider the following tree.

1. List Representation¶

-

In this representation, we use two types of nodes one for representing the node with data called 'data node' and another for representing only references called 'reference node'.

-

We start with a 'data node' from the root node in the tree.

-

Then it is linked to an internal node through a 'reference node' which is further linked to any other node directly.

-

This process repeats for all the nodes in the tree.

The above example tree can be represented using List representation as follows...

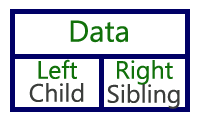

2. Left Child - Right Sibling Representation¶

-

In this representation, we use a list with one type of node which consists of three fields namely Data field, Left child reference field and Right sibling reference field.

-

Data field stores the actual value of a node, left reference field stores the address of the left child and right reference field stores the address of the right sibling node.

- Graphical representation of that node is as follows.

-

In this representation, every node's data field stores the actual value of that node. If that node has left a child, then left reference field stores the address of that left child node otherwise stores NULL.

-

If that node has the right sibling, then right reference field stores the address of right sibling node otherwise stores NULL.

- The above example tree can be represented using Left Child - Right Sibling representation as follows.

Binary Tree Datastructure¶

- Construction and Conversion

- Checking and Printing

- Summation

- Longest Common Ancestor

Lowest Common Ancestor in a Binary Tree - GeeksforGeeks - Btech Smart Class - http://www.btechsmartclass.com/data_structures/binary-tree.html - William Fiset - https://www.youtube.com/watch?v=sD1IoalFomA&ab_channel=WilliamFiset

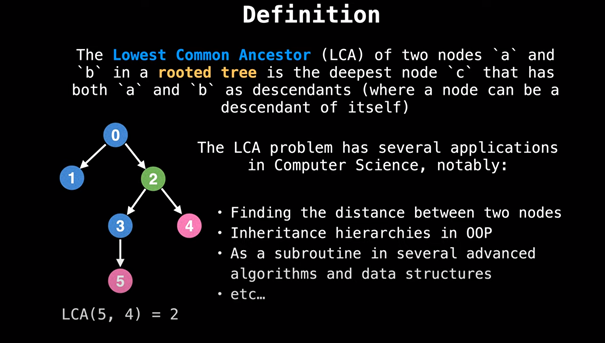

Longet Common Ancestor¶

Longet Common Ancestor¶

Lowest Common Ancestor in a Binary Tree¶

The lowest common ancestor is the lowest node in the tree that has both n1 and n2 as descendants, where n1 and n2 are the nodes for which we wish to find the LCA. Hence, the LCA of a binary tree with nodes n1 and n2 is the shared ancestor of n1 and n2 that is located farthest from the root.

Application of Lowest Common Ancestor(LCA)¶

To determine the distance between pairs of nodes in a tree: the distance from n1 to n2 can be computed as the distance from the root to n1, plus the distance from the root to n2, minus twice the distance from the root to their lowest common ancestor.

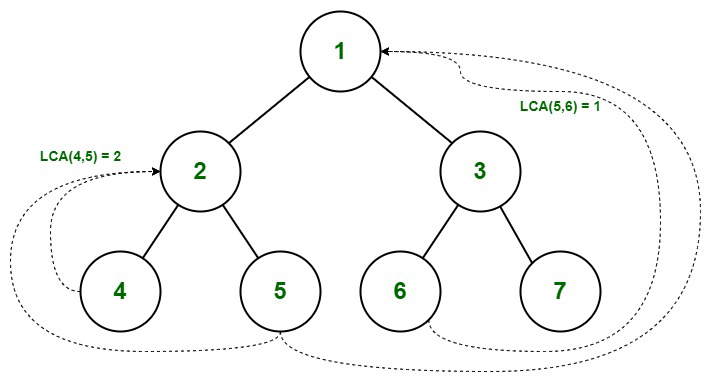

Lowest Common Ancestor in Binary Tree

Illustration:

Find the LCA of 5 and 6

Path from root to 5 = { 1, 2, 5 }

Path from root to 6 = { 1, 3, 6 }

- We start checking from 0 index. As both of the value matches( pathA[0] = pathB[0] ), we move to the next index.

- pathA[1] not equals to pathB[1], there’s a mismatch so we consider the previous value.

- Therefore the LCA of (5,6) = 1

LCA in C++¶

// C++ Program for Lowest Common Ancestor in a Binary Tree

// A O(n) solution to find LCA of two given values n1 and n2

#include <iostream>

#include <vector>

using namespace std;

// A Binary Tree node

struct Node

{

int key;

struct Node *left, *right;

};

// Utility function creates a new binary tree node with given key

Node * newNode(int k)

{

Node *temp = new Node;

temp->key = k;

temp->left = temp->right = NULL;

return temp;

}

// Finds the path from root node to given root of the tree, Stores the

// path in a vector path[], returns true if path exists otherwise false

bool findPath(Node *root, vector<int> &path, int k)

{

// base case

if (root == NULL) return false;

// Store this node in path vector. The node will be removed if

// not in path from root to k

path.push_back(root->key);

// See if the k is same as root's key

if (root->key == k)

return true;

// Check if k is found in left or right sub-tree

if ( (root->left && findPath(root->left, path, k)) ||

(root->right && findPath(root->right, path, k)) )

return true;

// If not present in subtree rooted with root, remove root from

// path[] and return false

path.pop_back();

return false;

}

// Returns LCA if node n1, n2 are present in the given binary tree,

// otherwise return -1

int findLCA(Node *root, int n1, int n2)

{

// to store paths to n1 and n2 from the root

vector<int> path1, path2;

// Find paths from root to n1 and root to n2. If either n1 or n2

// is not present, return -1

if ( !findPath(root, path1, n1) || !findPath(root, path2, n2))

return -1;

/* Compare the paths to get the first different value */

int i;

for (i = 0; i < path1.size() && i < path2.size() ; i++)

if (path1[i] != path2[i])

break;

return path1[i-1];

}

// Driver program to test above functions

int main()

{

// Let us create the Binary Tree shown in above diagram.

Node * root = newNode(1);

root->left = newNode(2);

root->right = newNode(3);

root->left->left = newNode(4);

root->left->right = newNode(5);

root->right->left = newNode(6);

root->right->right = newNode(7);

cout << "LCA(4, 5) = " << findLCA(root, 4, 5);

cout << "\nLCA(4, 6) = " << findLCA(root, 4, 6);

cout << "\nLCA(3, 4) = " << findLCA(root, 3, 4);

cout << "\nLCA(2, 4) = " << findLCA(root, 2, 4);

return 0;

}

LCA in Java¶

// Java Program for Lowest Common Ancestor in a Binary Tree

// A O(n) solution to find LCA of two given values n1 and n2

import java.util.ArrayList;

import java.util.List;

// A Binary Tree node

class Node {

int data;

Node left, right;

Node(int value) {

data = value;

left = right = null;

}

}

public class BT_NoParentPtr_Solution1

{

Node root;

private List<Integer> path1 = new ArrayList<>();

private List<Integer> path2 = new ArrayList<>();

// Finds the path from root node to given root of the tree.

int findLCA(int n1, int n2) {

path1.clear();

path2.clear();

return findLCAInternal(root, n1, n2);

}

private int findLCAInternal(Node root, int n1, int n2) {

if (!findPath(root, n1, path1) || !findPath(root, n2, path2)) {

System.out.println((path1.size() > 0) ? "n1 is present" : "n1 is missing");

System.out.println((path2.size() > 0) ? "n2 is present" : "n2 is missing");

return -1;

}

int i;

for (i = 0; i < path1.size() && i < path2.size(); i++) {

// System.out.println(path1.get(i) + " " + path2.get(i));

if (!path1.get(i).equals(path2.get(i)))

break;

}

return path1.get(i-1);

}

// Finds the path from root node to given root of the tree, Stores the

// path in a vector path[], returns true if path exists otherwise false

private boolean findPath(Node root, int n, List<Integer> path)

{

// base case

if (root == null) {

return false;

}

// Store this node . The node will be removed if

// not in path from root to n.

path.add(root.data);

if (root.data == n) {

return true;

}

if (root.left != null && findPath(root.left, n, path)) {

return true;

}

if (root.right != null && findPath(root.right, n, path)) {

return true;

}

// If not present in subtree rooted with root, remove root from

// path[] and return false

path.remove(path.size()-1);

return false;

}

// Driver code

public static void main(String[] args)

{

BT_NoParentPtr_Solution1 tree = new BT_NoParentPtr_Solution1();

tree.root = new Node(1);

tree.root.left = new Node(2);

tree.root.right = new Node(3);

tree.root.left.left = new Node(4);

tree.root.left.right = new Node(5);

tree.root.right.left = new Node(6);

tree.root.right.right = new Node(7);

System.out.println("LCA(4, 5): " + tree.findLCA(4,5));

System.out.println("LCA(4, 6): " + tree.findLCA(4,6));

System.out.println("LCA(3, 4): " + tree.findLCA(3,4));

System.out.println("LCA(2, 4): " + tree.findLCA(2,4));

}

}

// This code is contributed by Sreenivasulu Rayanki.

LCA in C¶

// C# Program for Lowest Common

// Ancestor in a Binary Tree

// A O(n) solution to find LCA

// of two given values n1 and n2

using System.Collections;

using System;

// A Binary Tree node

class Node

{

public int data;

public Node left, right;

public Node(int value)

{

data = value;

left = right = null;

}

}

public class BT_NoParentPtr_Solution1

{

Node root;

private ArrayList path1 =

new ArrayList();

private ArrayList path2 =

new ArrayList();

// Finds the path from root

// node to given root of the

// tree.

int findLCA(int n1,

int n2)

{

path1.Clear();

path2.Clear();

return findLCAInternal(root,

n1, n2);

}

private int findLCAInternal(Node root,

int n1, int n2)

{

if (!findPath(root, n1, path1) ||

!findPath(root, n2, path2)) {

Console.Write((path1.Count > 0) ?

"n1 is present" :

"n1 is missing");

Console.Write((path2.Count > 0) ?

"n2 is present" :

"n2 is missing");

return -1;

}

int i;

for (i = 0; i < path1.Count &&

i < path2.Count; i++)

{

// System.out.println(path1.get(i)

// + " " + path2.get(i));

if ((int)path1[i] !=

(int)path2[i])

break;

}

return (int)path1[i - 1];

}

// Finds the path from root node

// to given root of the tree,

// Stores the path in a vector

// path[], returns true if path

// exists otherwise false

private bool findPath(Node root,

int n,

ArrayList path)

{

// base case

if (root == null)

{

return false;

}

// Store this node . The node

// will be removed if not in

// path from root to n.

path.Add(root.data);

if (root.data == n)

{

return true;

}

if (root.left != null &&

findPath(root.left,

n, path))

{

return true;

}

if (root.right != null &&

findPath(root.right,

n, path))

{

return true;

}

// If not present in subtree

//rooted with root, remove root

// from path[] and return false

path.RemoveAt(path.Count - 1);

return false;

}

// Driver code

public static void Main(String[] args)

{

BT_NoParentPtr_Solution1 tree =

new BT_NoParentPtr_Solution1();

tree.root = new Node(1);

tree.root.left = new Node(2);

tree.root.right = new Node(3);

tree.root.left.left = new Node(4);

tree.root.left.right = new Node(5);

tree.root.right.left = new Node(6);

tree.root.right.right = new Node(7);

Console.Write("LCA(4, 5): " +

tree.findLCA(4, 5));

Console.Write("\nLCA(4, 6): " +

tree.findLCA(4, 6));

Console.Write("\nLCA(3, 4): " +

tree.findLCA(3, 4));

Console.Write("\nLCA(2, 4): " +

tree.findLCA(2, 4));

}

}

// This code is contributed by Rutvik_56

Output¶

-

Time Complexity: O(n). The tree is traversed twice, and then path arrays are compared.

-

Auxiliary Space: O(n). Extra Space for path1 and path2.

Binary Tree Datastructure¶

-

In a normal tree, every node can have any number of children.

-

A binary tree is a special type of tree data structure in which every node can have a maximum of 2 children.

-

One is known as a left child and the other is known as right child.

-

A tree in which every node can have a maximum of two children is called Binary Tree.

-

In a binary tree, every node can have either 0 children or 1 child or 2 children but not more than 2 children.

Example¶

There are different types of binary trees and they are

1. Strictly Binary Tree (Full Binary Tree / Proper Binary Tree or 2-Tree)¶

-

In a binary tree, every node can have a maximum of two children.

-

But in strictly binary tree, every node should have exactly two children or none. That means every internal node must have exactly two children.

-

A strictly Binary Tree can be defined as follows.

-

A binary tree in which every node has either two or zero number of children is called Strictly Binary Tree

-

Strictly binary tree is also called as Full Binary Tree or Proper Binary Tree or 2-Tree

Example¶

- Strictly binary tree data structure is used to represent mathematical expressions.

Full Binary Tree Theorems¶

Let, i = the number of internal nodes

n = be the total number of nodes

l = number of leaves

λ = number of levels

Full Binary Tree Theorems¶

- The number of leaves is

i + 1. - The total number of nodes is

2i + 1. - The number of internal nodes is

(n – 1) / 2. - The number of leaves is

(n + 1) / 2. - The total number of nodes is

2l – 1. - The number of internal nodes is

l – 1. - The number of leaves is at most

2λ - 1.

Full Binary Tree in C¶

// Checking if a binary tree is a full binary tree in C

#include <stdbool.h>

#include <stdio.h>

#include <stdlib.h>

struct Node {

int item;

struct Node *left, *right;

};

// Creation of new Node

struct Node *createNewNode(char k) {

struct Node *node = (struct Node *)malloc(sizeof(struct Node));

node->item = k;

node->right = node->left = NULL;

return node;

}

bool isFullBinaryTree(struct Node *root) {

// Checking tree emptiness

if (root == NULL)

return true;

// Checking the presence of children

if (root->left == NULL && root->right == NULL)

return true;

if ((root->left) && (root->right))

return (isFullBinaryTree(root->left) && isFullBinaryTree(root->right));

return false;

}

int main() {

struct Node *root = NULL;

root = createNewNode(1);

root->left = createNewNode(2);

root->right = createNewNode(3);

root->left->left = createNewNode(4);

root->left->right = createNewNode(5);

root->left->right->left = createNewNode(6);

root->left->right->right = createNewNode(7);

if (isFullBinaryTree(root))

printf("The tree is a full binary tree\n");

else

printf("The tree is not a full binary tree\n");

}

Full Binary Tree in C++¶

// Checking if a binary tree is a full binary tree in C++

#include <iostream>

using namespace std;

struct Node {

int key;

struct Node *left, *right;

};

// New node creation

struct Node *newNode(char k) {

struct Node *node = (struct Node *)malloc(sizeof(struct Node));

node->key = k;

node->right = node->left = NULL;

return node;

}

bool isFullBinaryTree(struct Node *root) {

// Checking for emptiness

if (root == NULL)

return true;

// Checking for the presence of children

if (root->left == NULL && root->right == NULL)

return true;

if ((root->left) && (root->right))

return (isFullBinaryTree(root->left) && isFullBinaryTree(root->right));

return false;

}

int main() {

struct Node *root = NULL;

root = newNode(1);

root->left = newNode(2);

root->right = newNode(3);

root->left->left = newNode(4);

root->left->right = newNode(5);

root->left->right->left = newNode(6);

root->left->right->right = newNode(7);

if (isFullBinaryTree(root))

cout << "The tree is a full binary tree\n";

else

cout << "The tree is not a full binary tree\n";

}

Full Binary in Java¶

// Checking if a binary tree is a full binary tree in Java

class Node {

int data;

Node leftChild, rightChild;

Node(int item) {

data = item;

leftChild = rightChild = null;

}

}

class BinaryTree {

Node root;

// Check for Full Binary Tree

boolean isFullBinaryTree(Node node) {

// Checking tree emptiness

if (node == null)

return true;

// Checking the children

if (node.leftChild == null && node.rightChild == null)

return true;

if ((node.leftChild != null) && (node.rightChild != null))

return (isFullBinaryTree(node.leftChild) && isFullBinaryTree(node.rightChild));

return false;

}

public static void main(String args[]) {

BinaryTree tree = new BinaryTree();

tree.root = new Node(1);

tree.root.leftChild = new Node(2);

tree.root.rightChild = new Node(3);

tree.root.leftChild.leftChild = new Node(4);

tree.root.leftChild.rightChild = new Node(5);

tree.root.rightChild.leftChild = new Node(6);

tree.root.rightChild.rightChild = new Node(7);

if (tree.isFullBinaryTree(tree.root))

System.out.print("The tree is a full binary tree");

else

System.out.print("The tree is not a full binary tree");

}

}

2. Complete Binary Tree (Perfect Binary Tree)¶

-

In a binary tree, every node can have a maximum of two children.

-

But in strictly binary tree, every node should have exactly two children or none and in complete binary tree all the nodes must have exactly two children and at every level of complete binary tree there must be 2level number of nodes.

-

For example at level 2 there must be 22 = 4 nodes and at level 3 there must be 23 = 8 nodes.

-

A binary tree in which every internal node has exactly two children and all leaf nodes are at same level is called Complete Binary Tree.

-

Complete binary tree is also called as Perfect Binary Tree

Perfect Binary Tree Theorems¶

- A perfect binary tree of height h has

2h + 1 – 1node. - A perfect binary tree with n nodes has height

log(n + 1) – 1 = Θ(ln(n)). - A perfect binary tree of height h has

2hleaf nodes. - The average depth of a node in a perfect binary tree is

Θ(ln(n)).

Perfect Binary Tree in C¶

// Checking if a binary tree is a perfect binary tree in C

#include <stdbool.h>

#include <stdio.h>

#include <stdlib.h>

struct node {

int data;

struct node *left;

struct node *right;

};

// Creating a new node

struct node *newnode(int data) {

struct node *node = (struct node *)malloc(sizeof(struct node));

node->data = data;

node->left = NULL;

node->right = NULL;

return (node);

}

// Calculate the depth

int depth(struct node *node) {

int d = 0;

while (node != NULL) {

d++;

node = node->left;

}

return d;

}

// Check if the tree is perfect

bool is_perfect(struct node *root, int d, int level) {

// Check if the tree is empty

if (root == NULL)

return true;

// Check the presence of children

if (root->left == NULL && root->right == NULL)

return (d == level + 1);

if (root->left == NULL || root->right == NULL)

return false;

return is_perfect(root->left, d, level + 1) &&

is_perfect(root->right, d, level + 1);

}

// Wrapper function

bool is_Perfect(struct node *root) {

int d = depth(root);

return is_perfect(root, d, 0);

}

int main() {

struct node *root = NULL;

root = newnode(1);

root->left = newnode(2);

root->right = newnode(3);

root->left->left = newnode(4);

root->left->right = newnode(5);

root->right->left = newnode(6);

if (is_Perfect(root))

printf("The tree is a perfect binary tree\n");

else

printf("The tree is not a perfect binary tree\n");

}

Perfect Binary Tree in C++¶

// Checking if a binary tree is a perfect binary tree in C++

#include <iostream>

using namespace std;

struct Node {

int key;

struct Node *left, *right;

};

int depth(Node *node) {

int d = 0;

while (node != NULL) {

d++;

node = node->left;

}

return d;

}

bool isPerfectR(struct Node *root, int d, int level = 0) {

if (root == NULL)

return true;

if (root->left == NULL && root->right == NULL)

return (d == level + 1);

if (root->left == NULL || root->right == NULL)

return false;

return isPerfectR(root->left, d, level + 1) &&

isPerfectR(root->right, d, level + 1);

}

bool isPerfect(Node *root) {

int d = depth(root);

return isPerfectR(root, d);

}

struct Node *newNode(int k) {

struct Node *node = new Node;

node->key = k;

node->right = node->left = NULL;

return node;

}

int main() {

struct Node *root = NULL;

root = newNode(1);

root->left = newNode(2);

root->right = newNode(3);

root->left->left = newNode(4);

root->left->right = newNode(5);

root->right->left = newNode(6);

if (isPerfect(root))

cout << "The tree is a perfect binary tree\n";

else

cout << "The tree is not a perfect binary tree\n";

}

Perfect Binary Tree in Java¶

// Checking if a binary tree is a perfect binary tree in Java

class PerfectBinaryTree {

static class Node {

int key;

Node left, right;

}

// Calculate the depth

static int depth(Node node) {

int d = 0;

while (node != null) {

d++;

node = node.left;

}

return d;

}

// Check if the tree is perfect binary tree

static boolean is_perfect(Node root, int d, int level) {

// Check if the tree is empty

if (root == null)

return true;

// If for children

if (root.left == null && root.right == null)

return (d == level + 1);

if (root.left == null || root.right == null)

return false;

return is_perfect(root.left, d, level + 1) && is_perfect(root.right, d, level + 1);

}

// Wrapper function

static boolean is_Perfect(Node root) {

int d = depth(root);

return is_perfect(root, d, 0);

}

// Create a new node

static Node newNode(int k) {

Node node = new Node();

node.key = k;

node.right = null;

node.left = null;

return node;

}

public static void main(String args[]) {

Node root = null;

root = newNode(1);

root.left = newNode(2);

root.right = newNode(3);

root.left.left = newNode(4);

root.left.right = newNode(5);

if (is_Perfect(root) == true)

System.out.println("The tree is a perfect binary tree");

else

System.out.println("The tree is not a perfect binary tree");

}

}

3. Extended Binary Tree¶

-

A binary tree can be converted into Full Binary tree by adding dummy nodes to existing nodes wherever required.

-

The full binary tree obtained by adding dummy nodes to a binary tree is called as Extended Binary Tree.

- In above figure, a normal binary tree is converted into full binary tree by adding dummy nodes (In pink colour).

Complete Binary Tree¶

-

A complete binary tree is a binary tree in which all the levels are completely filled except possibly the lowest one, which is filled from the left.

-

A complete binary tree is just like a full binary tree, but with two major differences

-

All the leaf elements must lean towards the left.

-

The last leaf element might not have a right sibling i.e. a complete binary tree doesn't have to be a full binary tree.

Complete Binary Tree¶

Comparison between full binary tree and complete binary tree¶

Comparison between full binary tree and complete binary tree¶

Comparison between full binary tree and complete binary tree¶

Comparison between full binary tree and complete binary tree¶

How a Complete Binary Tree is Created?¶

- Select the first element of the list to be the root node. (no. of elements on level-I: 1)

Select the first element as root

- Put the second element as a left child of the root node and the third element as the right child. (no. of elements on level-II: 2)

12 as a left child and 9 as a right child

- Put the next two elements as children of the left node of the second level. Again, put the next two elements as children of the right node of the second level (no. of elements on level-III: 4) elements).

- Keep repeating until you reach the last element.

5 as a left child and 6 as a right child

Relationship between array indexes and tree element¶

-

A complete binary tree has an interesting property that we can use to find the children and parents of any node.

-

If the index of any element in the array is i, the element in the index

2i+1will become the left child and element in2i+2index will become the right child. -

Also, the parent of any element at index i is given by the lower bound of

(i-1)/2.

Complete Binary Tree in C¶

// Checking if a binary tree is a complete binary tree in C

#include <stdbool.h>

#include <stdio.h>

#include <stdlib.h>

struct Node {

int key;

struct Node *left, *right;

};

// Node creation

struct Node *newNode(char k) {

struct Node *node = (struct Node *)malloc(sizeof(struct Node));

node->key = k;

node->right = node->left = NULL;

return node;

}

// Count the number of nodes

int countNumNodes(struct Node *root) {

if (root == NULL)

return (0);

return (1 + countNumNodes(root->left) + countNumNodes(root->right));

}

// Check if the tree is a complete binary tree

bool checkComplete(struct Node *root, int index, int numberNodes) {

// Check if the tree is complete

if (root == NULL)

return true;

if (index >= numberNodes)

return false;

return (checkComplete(root->left, 2 * index + 1, numberNodes) && checkComplete(root->right, 2 * index + 2, numberNodes));

}

int main() {

struct Node *root = NULL;

root = newNode(1);

root->left = newNode(2);

root->right = newNode(3);

root->left->left = newNode(4);

root->left->right = newNode(5);

root->right->left = newNode(6);

int node_count = countNumNodes(root);

int index = 0;

if (checkComplete(root, index, node_count))

printf("The tree is a complete binary tree\n");

else

printf("The tree is not a complete binary tree\n");

}

Complete Binary Tree in C++¶

// Checking if a binary tree is a complete binary tree in C++

#include <iostream>

using namespace std;

struct Node {

int key;

struct Node *left, *right;

};

// Create node

struct Node *newNode(char k) {

struct Node *node = (struct Node *)malloc(sizeof(struct Node));

node->key = k;

node->right = node->left = NULL;

return node;

}

// Count the number of nodes

int countNumNodes(struct Node *root) {

if (root == NULL)

return (0);

return (1 + countNumNodes(root->left) + countNumNodes(root->right));

}

// Check if the tree is a complete binary tree

bool checkComplete(struct Node *root, int index, int numberNodes) {

// Check if the tree is empty

if (root == NULL)

return true;

if (index >= numberNodes)

return false;

return (checkComplete(root->left, 2 * index + 1, numberNodes) && checkComplete(root->right, 2 * index + 2, numberNodes));

}

int main() {

struct Node *root = NULL;

root = newNode(1);

root->left = newNode(2);

root->right = newNode(3);

root->left->left = newNode(4);

root->left->right = newNode(5);

root->right->left = newNode(6);

int node_count = countNumNodes(root);

int index = 0;

if (checkComplete(root, index, node_count))

cout << "The tree is a complete binary tree\n";

else

cout << "The tree is not a complete binary tree\n";

}

Complete Binary Tree in Java¶

// Checking if a binary tree is a complete binary tree in Java

// Node creation

class Node {

int data;

Node left, right;

Node(int item) {

data = item;

left = right = null;

}

}

class BinaryTree {

Node root;

// Count the number of nodes

int countNumNodes(Node root) {

if (root == null)

return (0);

return (1 + countNumNodes(root.left) + countNumNodes(root.right));

}

// Check for complete binary tree

boolean checkComplete(Node root, int index, int numberNodes) {

// Check if the tree is empty

if (root == null)

return true;

if (index >= numberNodes)

return false;

return (checkComplete(root.left, 2 * index + 1, numberNodes)

&& checkComplete(root.right, 2 * index + 2, numberNodes));

}

public static void main(String args[]) {

BinaryTree tree = new BinaryTree();

tree.root = new Node(1);

tree.root.left = new Node(2);

tree.root.right = new Node(3);

tree.root.left.right = new Node(5);

tree.root.left.left = new Node(4);

tree.root.right.left = new Node(6);

int node_count = tree.countNumNodes(tree.root);

int index = 0;

if (tree.checkComplete(tree.root, index, node_count))

System.out.println("The tree is a complete binary tree");

else

System.out.println("The tree is not a complete binary tree");

}

}

Binary Tree Representations¶

Binary Tree Representations¶

-

A binary tree data structure is represented using two methods. Those methods are as follows.

-

Array Representation

-

Linked List Representation

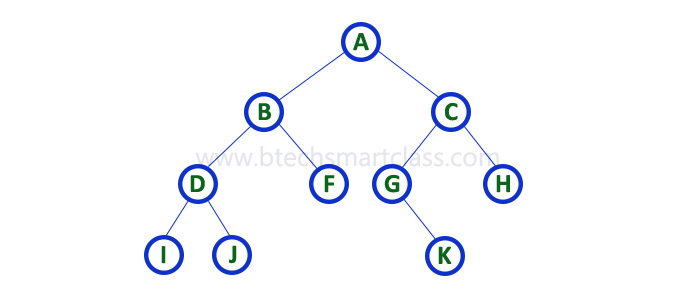

- Consider the following binary tree

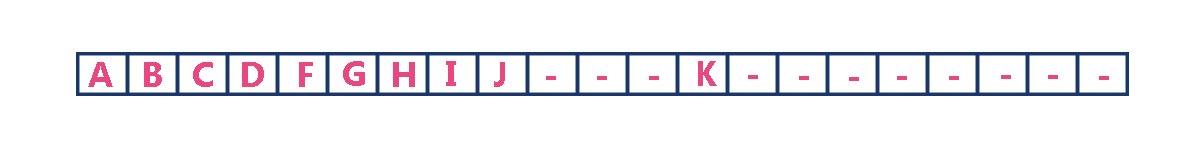

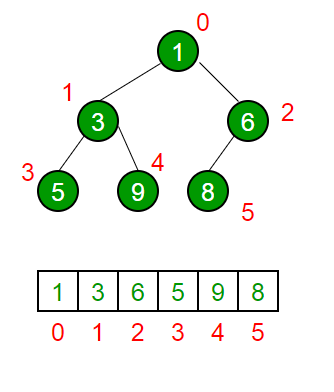

1. Array Representation of Binary Tree¶

-

In array representation of a binary tree, we use one-dimensional array (1-D Array) to represent a binary tree.

-

Consider the above example of a binary tree and it is represented as follows.

- To represent a binary tree of depth 'n' using array representation, we need one dimensional array with a maximum size of 2n + 1.

2. Linked List Representation of Binary Tree¶

-

We use a double linked list to represent a binary tree.

-

In a double linked list, every node consists of three fields.

-

First field for storing left child address, second for storing actual data and third for storing right child address.

-

In this linked list representation, a node has the following structure.

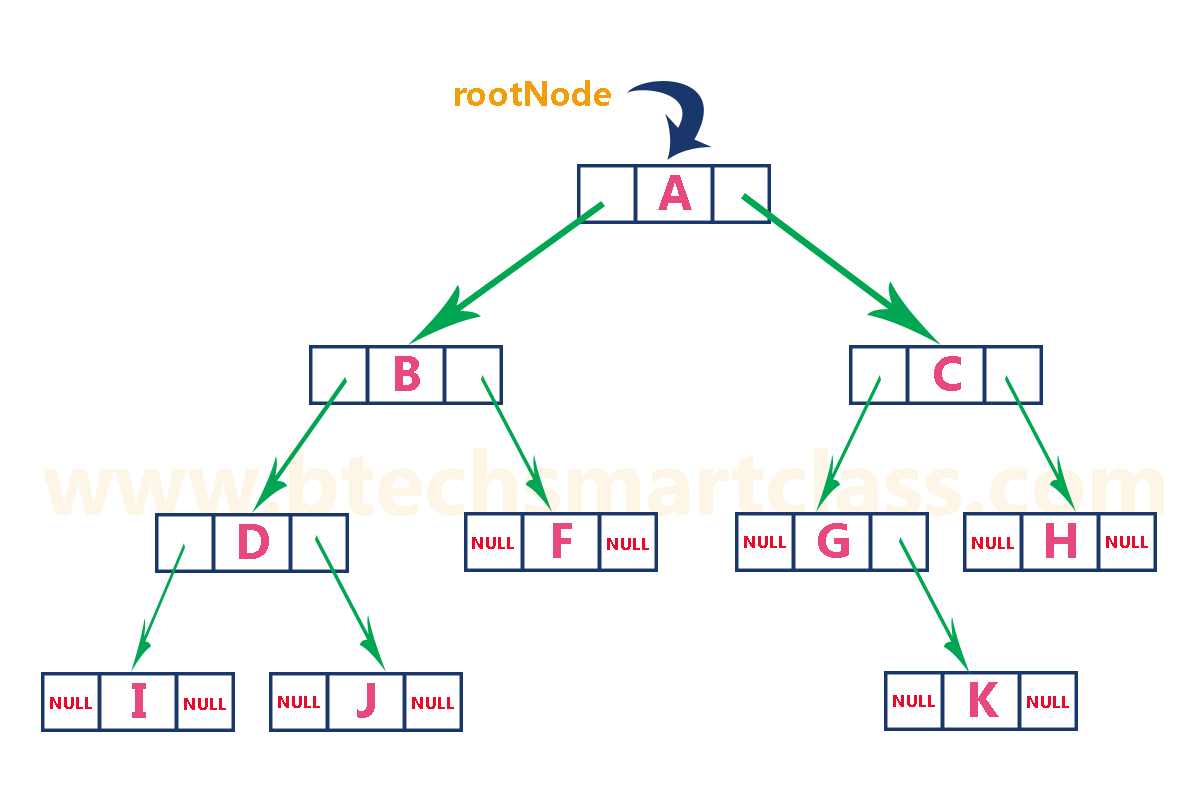

- The above example of the binary tree represented using Linked list representation is shown as follows.

Binary Tree Traversals¶

- Btech Smart Class

- http://www.btechsmartclass.com/data_structures/binary-tree-traversals.html

- In-Order

- Pre-Order

- Post-Order

Binary Tree Traversals¶

-

When we wanted to display a binary tree,

-

we need to follow some order in which all the nodes of that binary tree must be displayed.

-

In any binary tree, displaying order of nodes depends on the traversal method.

-

Displaying (or) visiting order of nodes in a binary tree is called as Binary Tree Traversal.

-

There are three types of binary tree traversals.

-

In - Order Traversal

-

Pre - Order Traversal

-

Post - Order Traversal

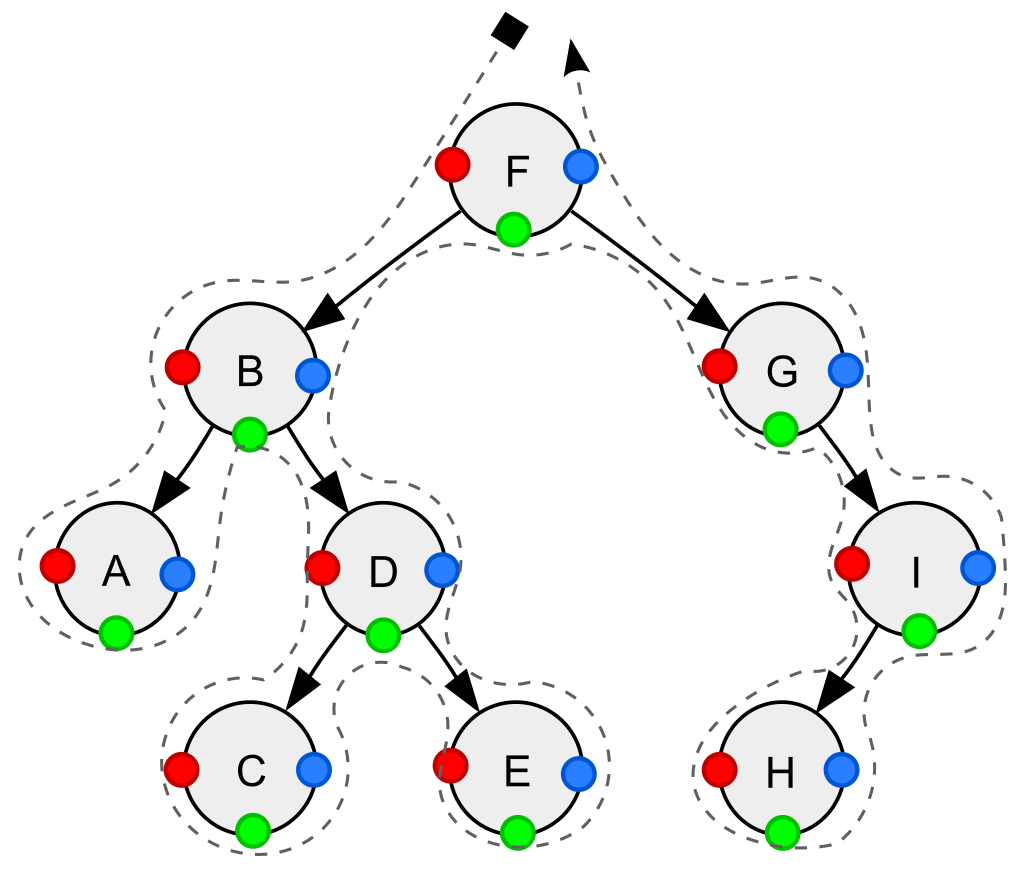

Consider the following binary tree for

ref : Tree traversal - Wikipedia

Depth-first traversal (dotted path) of a binary tree:

- Pre-order (node visited at position red): F, B, A, D, C, E, G, I, H;

- In-order (node visited at position green): A, B, C, D, E, F, G, H, I;

- Post-order (node visited at position blue): A, C, E, D, B, H, I, G, F.

Notations We Will Use For Orders¶

NLR : Node Left Right

LRN : Left Right Node

LNR : Left Node Right

Pre-order, NLR¶

- Visit the current node (in the figure: position red).

- Recursively traverse the current node's left subtree.

- Recursively traverse the current node's right subtree.

The pre-order traversal is a topologically sorted one, because a parent node is processed before any of its child nodes is done.

Post-order, LRN¶

- Recursively traverse the current node's left subtree.

- Recursively traverse the current node's right subtree.

- Visit the current node (in the figure: position blue).

Post-order traversal can be useful to get postfix expression of a binary expression tree.

In-order, LNR¶

- Recursively traverse the current node's left subtree.

- Visit the current node (in the figure: position green).

- Recursively traverse the current node's right subtree.

-

In a binary search tree ordered such that in each node the key is greater than all keys in its left subtree and less than all keys in its right subtree,

-

in-order traversal retrieves the keys in ascending sorted order.[7]

Reverse pre-order, NRL¶

- Visit the current node.

- Recursively traverse the current node's right subtree.

- Recursively traverse the current node's left subtree.

Reverse post-order, RLN¶

- Recursively traverse the current node's right subtree.

- Recursively traverse the current node's left subtree.

- Visit the current node.

Reverse in-order, RNL¶

- Recursively traverse the current node's right subtree.

- Visit the current node.

- Recursively traverse the current node's left subtree.

-

In a binary search tree ordered such that in each node the key is greater than all keys in its left subtree and less than all keys in its right subtree,

-

reverse in-order traversal retrieves the keys in descending sorted order.

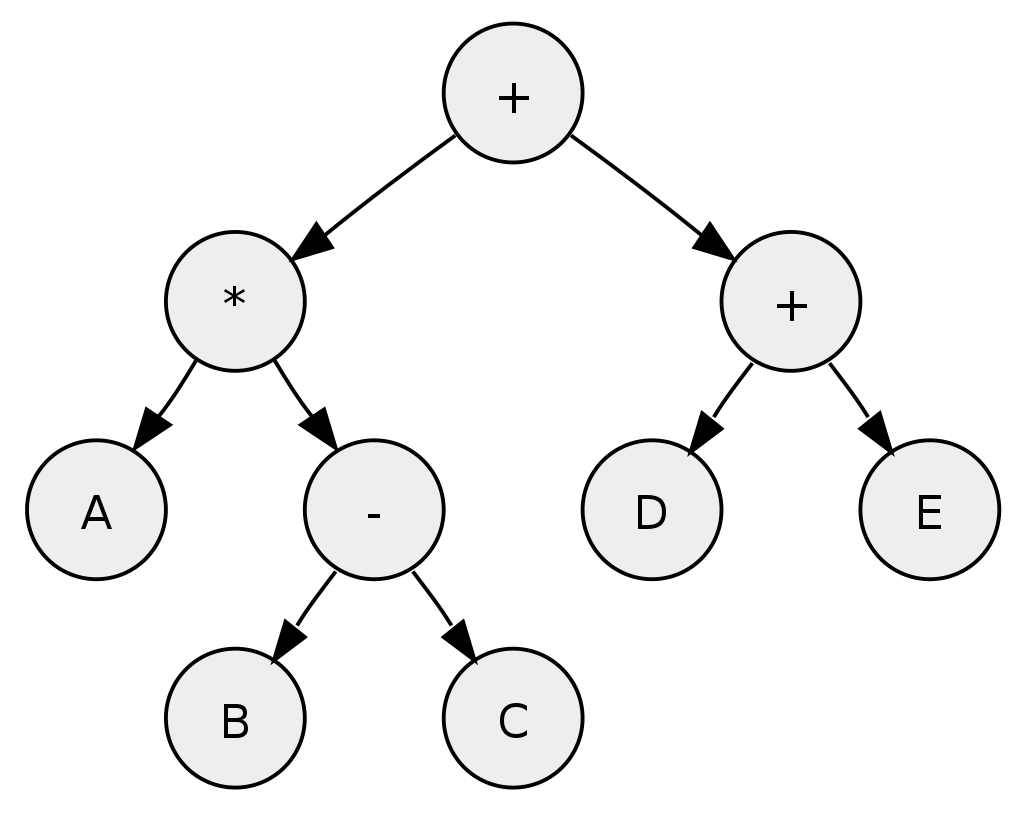

Applications for Pre-Order¶

-

Pre-order traversal can be used to make a prefix expression (Polish notation) from expression trees: traverse the expression tree pre-orderly.

-

For example, traversing the depicted arithmetic expression in pre-order yields "+ * A − B C + D E".

-

In prefix notation, no need any parentheses as long as each operator has a fixed number of operands.

-

Preorder traversal is also used to create a copy of the tree.

Tree representing the arithmetic expression: A * (B − C) + (D + E)

-

Post-order traversal can generate a postfix representation (Reverse Polish notation) of a binary tree.

-

Traversing the depicted arithmetic expression in post-order yields "A B C − * D E + +"; the latter can easily be transformed into machine code to evaluate the expression by a stack machine.

-

Postorder traversal is also used to delete the tree.

-

Each node is freed after freeing its children.

Pre-order implementation Recursive¶

Pre-order implementation Iterative¶

procedure iterativePreorder(node)

if node = null

return

stack ← empty stack

stack.push(node)

while not stack.isEmpty()

node ← stack.pop()

visit(node)

// right child is pushed first so that left is processed first

if node.right ≠ null

stack.push(node.right)

if node.left ≠ null

stack.push(node.left)

Post-order implementation Recursive¶

procedure postorder(node)

if node = null

return

postorder(node.left)

postorder(node.right)

visit(node)

Post-order implementation Iterative¶

procedure iterativePostorder(node)

stack ← empty stack

lastNodeVisited ← null

while not stack.isEmpty() or node ≠ null

if node ≠ null

stack.push(node)

node ← node.left

else

peekNode ← stack.peek()

// if right child exists and traversing node

// from left child, then move right

if peekNode.right ≠ null and lastNodeVisited ≠ peekNode.right

node ← peekNode.right

else

visit(peekNode)

lastNodeVisited ← stack.pop()

In-order implementation Recursive¶

In-order implementation Iterative¶

procedure iterativeInorder(node)

stack ← empty stack

while not stack.isEmpty() or node ≠ null

if node ≠ null

stack.push(node)

node ← node.left

else

node ← stack.pop()

visit(node)

node ← node.right

Binary Tree Traversal in C¶

// Tree traversal in C

#include <stdio.h>

#include <stdlib.h>

struct node {

int item;

struct node* left;

struct node* right;

};

// Inorder traversal

void inorderTraversal(struct node* root) {

if (root == NULL) return;

inorderTraversal(root->left);

printf("%d ->", root->item);

inorderTraversal(root->right);

}

// preorderTraversal traversal

void preorderTraversal(struct node* root) {

if (root == NULL) return;

printf("%d ->", root->item);

preorderTraversal(root->left);

preorderTraversal(root->right);

}

// postorderTraversal traversal

void postorderTraversal(struct node* root) {

if (root == NULL) return;

postorderTraversal(root->left);

postorderTraversal(root->right);

printf("%d ->", root->item);

}

// Create a new Node

struct node* createNode(value) {

struct node* newNode = malloc(sizeof(struct node));

newNode->item = value;

newNode->left = NULL;

newNode->right = NULL;

return newNode;

}

// Insert on the left of the node

struct node* insertLeft(struct node* root, int value) {

root->left = createNode(value);

return root->left;

}

// Insert on the right of the node

struct node* insertRight(struct node* root, int value) {

root->right = createNode(value);

return root->right;

}

int main() {

struct node* root = createNode(1);

insertLeft(root, 12);

insertRight(root, 9);

insertLeft(root->left, 5);

insertRight(root->left, 6);

printf("Inorder traversal \n");

inorderTraversal(root);

printf("\nPreorder traversal \n");

preorderTraversal(root);

printf("\nPostorder traversal \n");

postorderTraversal(root);

}

Binary Tree Traversal in C++¶

// Tree traversal in C++

#include <iostream>

using namespace std;

struct Node {

int data;

struct Node *left, *right;

Node(int data) {

this->data = data;

left = right = NULL;

}

};

// Preorder traversal

void preorderTraversal(struct Node* node) {

if (node == NULL)

return;

cout << node->data << "->";

preorderTraversal(node->left);

preorderTraversal(node->right);

}

// Postorder traversal

void postorderTraversal(struct Node* node) {

if (node == NULL)

return;

postorderTraversal(node->left);

postorderTraversal(node->right);

cout << node->data << "->";

}

// Inorder traversal

void inorderTraversal(struct Node* node) {

if (node == NULL)

return;

inorderTraversal(node->left);

cout << node->data << "->";

inorderTraversal(node->right);

}

int main() {

struct Node* root = new Node(1);

root->left = new Node(12);

root->right = new Node(9);

root->left->left = new Node(5);

root->left->right = new Node(6);

cout << "Inorder traversal ";

inorderTraversal(root);

cout << "\nPreorder traversal ";

preorderTraversal(root);

cout << "\nPostorder traversal ";

postorderTraversal(root);

Binary Tree Traversal in Java¶

// Tree traversal in Java

class Node {

int item;

Node left, right;

public Node(int key) {

item = key;

left = right = null;

}

}

class BinaryTree {

// Root of Binary Tree

Node root;

BinaryTree() {

root = null;

}

void postorder(Node node) {

if (node == null)

return;

// Traverse left

postorder(node.left);

// Traverse right

postorder(node.right);

// Traverse root

System.out.print(node.item + "->");

}

void inorder(Node node) {

if (node == null)

return;

// Traverse left

inorder(node.left);

// Traverse root

System.out.print(node.item + "->");

// Traverse right

inorder(node.right);

}

void preorder(Node node) {

if (node == null)

return;

// Traverse root

System.out.print(node.item + "->");

// Traverse left

preorder(node.left);

// Traverse right

preorder(node.right);

}

public static void main(String[] args) {

BinaryTree tree = new BinaryTree();

tree.root = new Node(1);

tree.root.left = new Node(12);

tree.root.right = new Node(9);

tree.root.left.left = new Node(5);

tree.root.left.right = new Node(6);

System.out.println("Inorder traversal");

tree.inorder(tree.root);

System.out.println("\nPreorder traversal ");

tree.preorder(tree.root);

System.out.println("\nPostorder traversal");

tree.postorder(tree.root);

}

}

Review¶

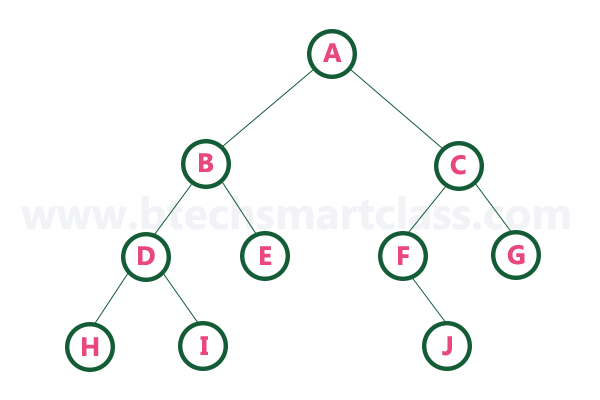

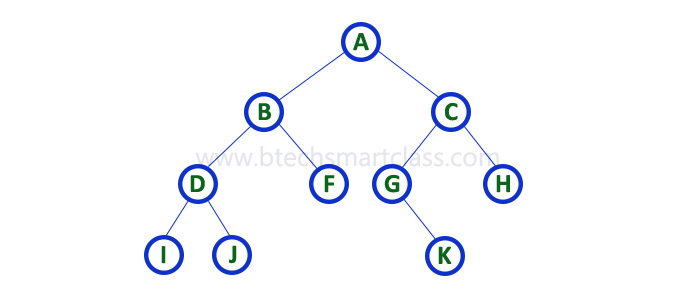

Consider the following binary tree.

1. In - Order Traversal ( leftChild - root - rightChild )¶

-

In In-Order traversal,

-

the root node is visited between the left child and right child.

-

In this traversal,

-

the left child node is visited first,

-

then the root node is visited and

-

later we go for visiting the right child node.

-

This in-order traversal is applicable for every root node of all subtrees in the tree. This is performed recursively for all nodes in the tree.

-

In the above example of a binary tree,

-

first we try to visit left child of root node 'A',

-

but A's left child 'B' is a root node for left subtree.

-

so we try to visit its (B's) left child 'D' and

-

again D is a root for subtree with nodes D, I and J.

-

So we try to visit its left child 'I' and it is the leftmost child.

-

So first we visit 'I' then go for its root node 'D' and later we visit D's right child 'J'.

-

With this we have completed the left part of node B.

-

Then visit 'B' and next B's right child 'F' is visited.

-

With this we have completed left part of node A.

-

Then visit root node 'A'. With this we have completed left and root parts of node A.

-

Then we go for the right part of the node A. In right of A again there is a subtree with root C. So go for left child of C and again it is a subtree with root G.

-

But G does not have left part so we visit 'G' and then visit G's right child K.

-

With this we have completed the left part of node C.

-

Then visit root node 'C' and next visit C's right child 'H' which is the rightmost child in the tree. So we stop the process.

- That means here we have visited in the order of I - D - J - B - F - A - G - K - C - H using In-Order Traversal.

In-Order Traversal for above example of binary tree is

I - D - J - B - F - A - G - K - C - H¶

2. Pre - Order Traversal ( root - leftChild - rightChild )¶

-

In Pre-Order traversal,

-

the root node is visited before the left child and right child nodes.

-

In this traversal,

-

the root node is visited first,

-

then its left child and

-

later its right child.

-

This pre-order traversal is applicable for every root node of all subtrees in the tree.

-

In the above example of binary tree,

-

first we visit root node 'A' then visit its left child 'B' which is a root for D and F.

-

So we visit B's left child 'D' and again D is a root for I and J.

-

So we visit D's left child 'I' which is the leftmost child.

-

So next we go for visiting D's right child 'J'.

-

With this we have completed root,

-

left and right parts of node D and root,

-

left parts of node B.

-

Next visit B's right child 'F'.

-

With this we have completed root and left parts of node A.

-

So we go for A's right child 'C' which is a root node for G and H.

-

After visiting C, we go for its left child 'G' which is a root for node K.

-

So next we visit left of G, but it does not have left child so we go for G's right child 'K'. With this, we have completed node C's root and left parts.

-

Next visit C's right child 'H' which is the rightmost child in the tree. So we stop the process.

-

That means here we have visited in the order of A-B-D-I-J-F-C-G-K-H using Pre-Order Traversal.

-

Pre-Order Traversal for above example binary tree is

-

A - B - D - I - J - F - C - G - K - H

3. Post - Order Traversal ( leftChild - rightChild - root )¶

-

In Post-Order traversal,

-

the root node is visited after left child and right child.

-

In this traversal,

-

left child node is visited first,

-

then its right child and

-

then its root node.

-

This is recursively performed until the right most node is visited.

-

Here we have visited in the order of I - J - D - F - B - K - G - H - C - A using Post-Order Traversal.

-

Post-Order Traversal for above example binary tree is

-

I - J - D - F - B - K - G - H - C - A

Program to Create Binary Tree and display using In-Order Traversal - C Programming¶

#include<stdio.h>

#include<conio.h>

struct Node{

int data;

struct Node *left;

struct Node *right;

};

struct Node *root = NULL;

int count = 0;

struct Node* insert(struct Node*, int);

void display(struct Node*);

void main(){

int choice, value;

clrscr();

printf("\n----- Binary Tree -----\n");

while(1){

printf("\n***** MENU *****\n");

printf("1. Insert\n2. Display\n3. Exit");

printf("\nEnter your choice: ");

scanf("%d",&choice);

switch(choice){

case 1: printf("\nEnter the value to be insert: ");

scanf("%d", &value);

root = insert(root,value);

break;

case 2: display(root); break;

case 3: exit(0);

default: printf("\nPlease select correct operations!!!\n");

}

}

}

struct Node* insert(struct Node *root,int value){

struct Node *newNode;

newNode = (struct Node*)malloc(sizeof(struct Node));

newNode->data = value;

if(root == NULL){

newNode->left = newNode->right = NULL;

root = newNode;

count++;

}

else{

if(count%2 != 0)

root->left = insert(root->left,value);

else

root->right = insert(root->right,value);

}

return root;

}

// display is performed by using Inorder Traversal

void display(struct Node *root)

{

if(root != NULL){

display(root->left);

printf("%d\t",root->data);

display(root->right);

}

}

Output¶

Output¶

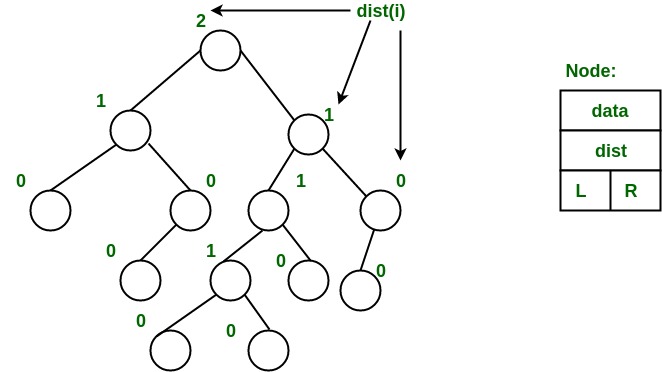

Threaded Binary Trees¶

Threaded Binary Trees¶

-

A binary tree can be represented using array representation or linked list representation.

-

When a binary tree is represented using linked list representation, the reference part of the node which doesn't have a child is filled with a NULL pointer.

-

In any binary tree linked list representation, there is a number of NULL pointers than actual pointers.

-

Generally, in any binary tree linked list representation, if there are 2N number of reference fields, then N+1 number of reference fields are filled with NULL ( N+1 are NULL out of 2N ).

-

This NULL pointer does not play any role except indicating that there is no link (no child).

-

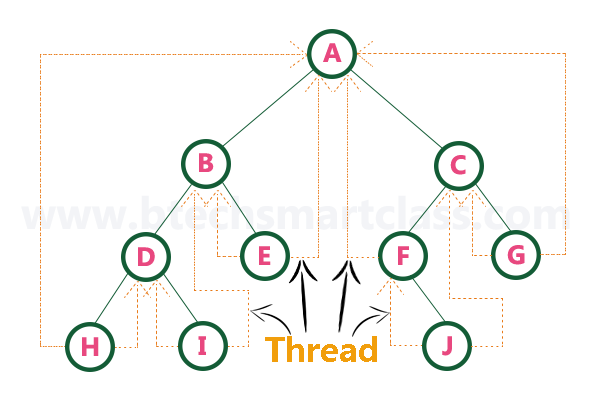

A. J. Perlis and C. Thornton have proposed new binary tree called "Threaded Binary Tree", which makes use of NULL pointers to improve its traversal process.

-

In a threaded binary tree, NULL pointers are replaced by references of other nodes in the tree. These extra references are called as threads.

-

Threaded Binary Tree is also a binary tree in which all left child pointers that are NULL (in Linked list representation) points to its in-order predecessor, and all right child pointers that are NULL (in Linked list representation) points to its in-order successor.

- If there is no in-order predecessor or in-order successor, then it points to the root node.

Consider the following binary tree.

- To convert the above example binary tree into a threaded binary tree, first find the in-order traversal of that tree...

In-order traversal of above binary tree...

H - D - I - B - E - A - F - J - C - G¶

-

When we represent the above binary tree using linked list representation, nodes H, I, E, F, J and G left child pointers are NULL.

-

This NULL is replaced by address of its in-order predecessor respectively (I to D, E to B, F to A, J to F and G to C), but here the node H does not have its in-order predecessor, so it points to the root node A.

-

And nodes H, I, E, J and G right child pointers are NULL.

-

These NULL pointers are replaced by address of its in-order successor respectively (H to D, I to B, E to A, and J to C), but here the node G does not have its in-order successor, so it points to the root node A.

- Above example binary tree is converted into threaded binary tree as follows.

- In the above figure, threads are indicated with dotted links.

Heaps (Max, Min, Binary , Binomial, Fibonacci, Leftist, K-ary) and Priority Queue¶

Heap Data Structure¶

Max-Heap¶

- Data Structures Tutorials - Max Heap with an exaple

- CE100 Algorithms and Programming II - RTEU CE100 Algorithms and Programming-II Course Notes

Max Priority Queue¶

-

Course Notes

-

CE100 Algorithms and Programming II - RTEU CE100 Algorithms and Programming-II Course Notes

-

Btech Smart Class

-

http://www.btechsmartclass.com/data_structures/max-priority-queue.html

-

William Fiset

-

https://www.youtube.com/watch?v=wptevk0bshY&t=0s&ab_channel=WilliamFiset

- https://github.com/williamfiset/Algorithms/tree/master/src/main/java/com/williamfiset/algorithms/datastructures/priorityqueue

Max Priority Queue with Heap¶

-

Please follow the link below for Heap and Max-Priority

-

CE100 Algorithms and Programming II - RTEU CE100 Algorithms and Programming-II Course Notes

Max Priority Queue in C¶

// Priority Queue implementation in C

#include <stdio.h>

int size = 0;

void swap(int *a, int *b) {

int temp = *b;

*b = *a;

*a = temp;

}

// Function to heapify the tree

void heapify(int array[], int size, int i) {

if (size == 1) {

printf("Single element in the heap");

} else {

// Find the largest among root, left child and right child

int largest = i;

int l = 2 * i + 1;

int r = 2 * i + 2;

if (l < size && array[l] > array[largest])

largest = l;

if (r < size && array[r] > array[largest])

largest = r;

// Swap and continue heapifying if root is not largest

if (largest != i) {

swap(&array[i], &array[largest]);

heapify(array, size, largest);

}

}

}

// Function to insert an element into the tree

void insert(int array[], int newNum) {

if (size == 0) {

array[0] = newNum;

size += 1;

} else {

array[size] = newNum;

size += 1;

for (int i = size / 2 - 1; i >= 0; i--) {

heapify(array, size, i);

}

}

}

// Function to delete an element from the tree

void deleteRoot(int array[], int num) {

int i;

for (i = 0; i < size; i++) {

if (num == array[i])

break;

}

swap(&array[i], &array[size - 1]);

size -= 1;

for (int i = size / 2 - 1; i >= 0; i--) {

heapify(array, size, i);

}

}

// Print the array

void printArray(int array[], int size) {

for (int i = 0; i < size; ++i)

printf("%d ", array[i]);

printf("\n");

}

// Driver code

int main() {

int array[10];

insert(array, 3);

insert(array, 4);

insert(array, 9);

insert(array, 5);

insert(array, 2);

printf("Max-Heap array: ");

printArray(array, size);

deleteRoot(array, 4);

printf("After deleting an element: ");

printArray(array, size);

}

Max Priority Queue in C++¶

// Priority Queue implementation in C++

#include <iostream>

#include <vector>

using namespace std;

// Function to swap position of two elements

void swap(int *a, int *b) {

int temp = *b;

*b = *a;

*a = temp;

}

// Function to heapify the tree

void heapify(vector<int> &hT, int i) {

int size = hT.size();

// Find the largest among root, left child and right child

int largest = i;

int l = 2 * i + 1;

int r = 2 * i + 2;

if (l < size && hT[l] > hT[largest])

largest = l;

if (r < size && hT[r] > hT[largest])

largest = r;

// Swap and continue heapifying if root is not largest

if (largest != i) {

swap(&hT[i], &hT[largest]);

heapify(hT, largest);

}

}

// Function to insert an element into the tree

void insert(vector<int> &hT, int newNum) {

int size = hT.size();

if (size == 0) {

hT.push_back(newNum);

} else {

hT.push_back(newNum);

for (int i = size / 2 - 1; i >= 0; i--) {

heapify(hT, i);

}

}

}

// Function to delete an element from the tree

void deleteNode(vector<int> &hT, int num) {

int size = hT.size();

int i;

for (i = 0; i < size; i++) {

if (num == hT[i])

break;

}

swap(&hT[i], &hT[size - 1]);

hT.pop_back();

for (int i = size / 2 - 1; i >= 0; i--) {

heapify(hT, i);

}

}

// Print the tree

void printArray(vector<int> &hT) {

for (int i = 0; i < hT.size(); ++i)

cout << hT[i] << " ";

cout << "\n";

}

// Driver code

int main() {

vector<int> heapTree;

insert(heapTree, 3);

insert(heapTree, 4);

insert(heapTree, 9);

insert(heapTree, 5);

insert(heapTree, 2);

cout << "Max-Heap array: ";

printArray(heapTree);

deleteNode(heapTree, 4);

cout << "After deleting an element: ";

printArray(heapTree);

}

Max Priority Queue in Java¶

// Priority Queue implementation in Java

import java.util.ArrayList;

class Heap {

// Function to heapify the tree

void heapify(ArrayList<Integer> hT, int i) {

int size = hT.size();

// Find the largest among root, left child and right child

int largest = i;

int l = 2 * i + 1;

int r = 2 * i + 2;

if (l < size && hT.get(l) > hT.get(largest))

largest = l;

if (r < size && hT.get(r) > hT.get(largest))

largest = r;

// Swap and continue heapifying if root is not largest

if (largest != i) {

int temp = hT.get(largest);

hT.set(largest, hT.get(i));

hT.set(i, temp);

heapify(hT, largest);

}

}

// Function to insert an element into the tree

void insert(ArrayList<Integer> hT, int newNum) {

int size = hT.size();

if (size == 0) {

hT.add(newNum);

} else {

hT.add(newNum);

for (int i = size / 2 - 1; i >= 0; i--) {

heapify(hT, i);

}

}

}

// Function to delete an element from the tree

void deleteNode(ArrayList<Integer> hT, int num) {

int size = hT.size();

int i;

for (i = 0; i < size; i++) {

if (num == hT.get(i))

break;

}

int temp = hT.get(i);

hT.set(i, hT.get(size - 1));

hT.set(size - 1, temp);

hT.remove(size - 1);

for (int j = size / 2 - 1; j >= 0; j--) {

heapify(hT, j);

}

}

// Print the tree

void printArray(ArrayList<Integer> array, int size) {

for (Integer i : array) {

System.out.print(i + " ");

}

System.out.println();

}

// Driver code

public static void main(String args[]) {

ArrayList<Integer> array = new ArrayList<Integer>();

int size = array.size();

Heap h = new Heap();

h.insert(array, 3);

h.insert(array, 4);

h.insert(array, 9);

h.insert(array, 5);

h.insert(array, 2);

System.out.println("Max-Heap array: ");

h.printArray(array, size);

h.deleteNode(array, 4);

System.out.println("After deleting an element: ");

h.printArray(array, size);

}

}

Max Priority Queue with Array¶

-

In the normal queue data structure,

-

insertion is performed at the end of the queue and deletion is performed based on the FIFO principle.

-

This queue implementation may not be suitable for all applications.

-

Consider a networking application where the server has to respond for requests from multiple clients using queue data structure.

- Assume four requests arrived at the queue in the order of R1, R2, R3 & R4 where R1 requires 20 units of time, R2 requires 2 units of time, R3 requires 10 units of time and R4 requires 5 units of time. A queue is as follows.

Now, check to wait time of each request that to be completed.

- R1 : 20 units of time

- R2 : 22 units of time (R2 must wait until R1 completes 20 units and R2 itself requires 2 units. Total 22 units)

- R3 : 32 units of time (R3 must wait until R2 completes 22 units and R3 itself requires 10 units. Total 32 units)

- R4 : 37 units of time (R4 must wait until R3 completes 35 units and R4 itself requires 5 units. Total 37 units)

****Here, the average waiting time for all requests (R1, R2, R3 and R4) is (20+22+32+37)/4 ≈ 27 units of time.**

-

That means, if we use a normal queue data structure to serve these requests the average waiting time for each request is 27 units of time.

-

Now, consider another way of serving these requests.

-

If we serve according to their required amount of time, first we serve R2 which has minimum time (2 units) requirement.

-

Then serve R4 which has second minimum time (5 units) requirement and then serve R3 which has third minimum time (10 units) requirement and finally R1 is served which has maximum time (20 units) requirement.

Now, check to wait time of each request that to be completed.

- R2 : 2 units of time

- R4 : 7 units of time (R4 must wait until R2 completes 2 units and R4 itself requires 5 units. Total 7 units)

- R3 : 17 units of time (R3 must wait until R4 completes 7 units and R3 itself requires 10 units. Total 17 units)

- R1 : 37 units of time (R1 must wait until R3 completes 17 units and R1 itself requires 20 units. Total 37 units)

****Here, the average waiting time for all requests (R1, R2, R3 and R4) is (2+7+17+37)/4 ≈ 15 units of time.**

-

From the above two situations, it is very clear that the second method server can complete all four requests with very less time compared to the first method.

-

This is what exactly done by the priority queue.

-

Priority queue is a variant of a queue data structure in which insertion is performed in the order of arrival and deletion is performed based on the priority.

-

There are two types of priority queues they are as follows.

-

Max Priority Queue

-

Min Priority Queue

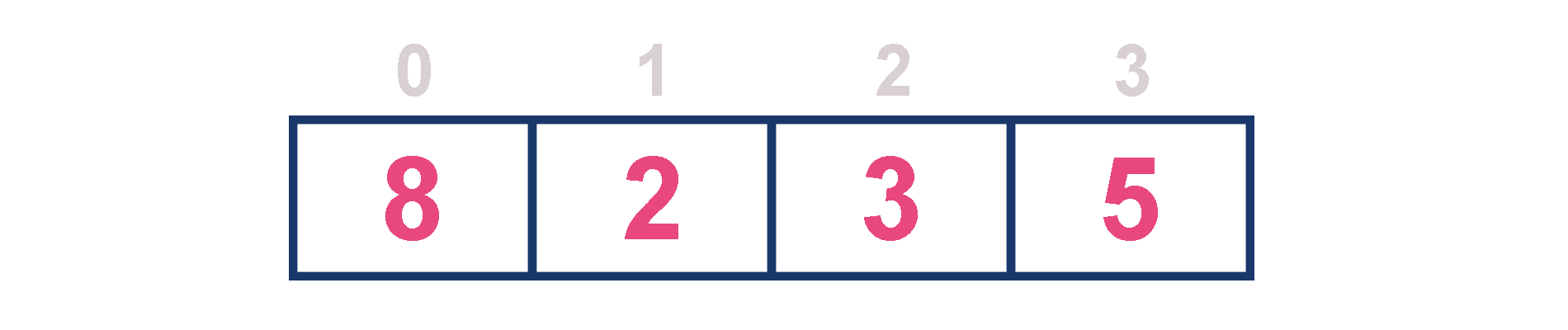

1. Max Priority Queue¶

-

In a max priority queue, elements are inserted in the order in which they arrive the queue and the maximum value is always removed first from the queue.

-

For example, assume that we insert in the order 8, 3, 2 & 5 and they are removed in the order 8, 5, 3, 2.

-

The following are the operations performed in a Max priority queue...

-

isEmpty() - Check whether queue is Empty.

-

insert() - Inserts a new value into the queue.

-

findMax() - Find maximum value in the queue.

-

remove() - Delete maximum value from the queue.

Max Priority Queue Representations¶

-

There are 6 representations of max priority queue.

-

Using an Unordered Array (Dynamic Array)

-

Using an Unordered Array (Dynamic Array) with the index of the maximum value

-

Using an Array (Dynamic Array) in Decreasing Order

-

Using an Array (Dynamic Array) in Increasing Order

-

Using Linked List in Increasing Order

-

Using Unordered Linked List with reference to node with the maximum value

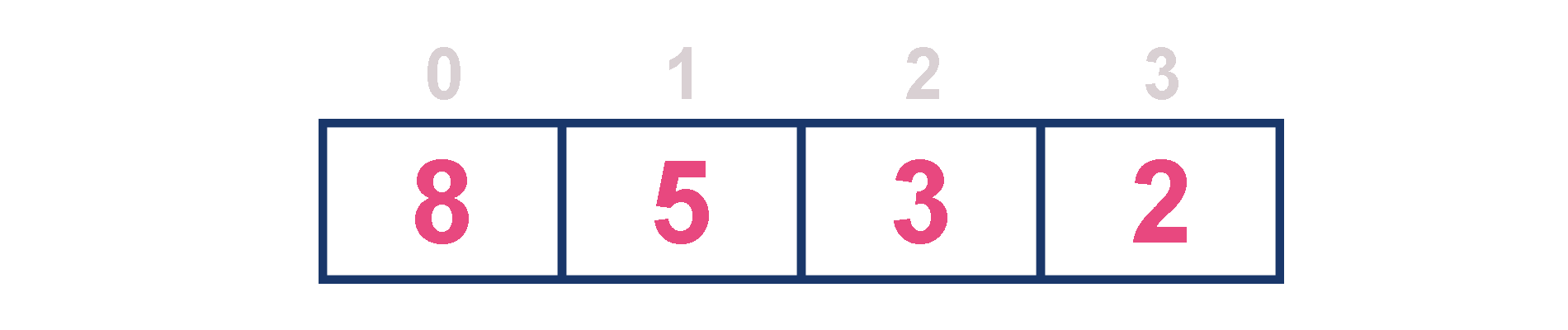

1. Using an Unordered Array (Dynamic Array)¶

-

In this representation, elements are inserted according to their arrival order and the largest element is deleted first from the max priority queue.

-

For example, assume that elements are inserted in the order of 8, 2, 3 and 5. And they are removed in the order 8, 5, 3 and 2.

-

Now, let us analyze each operation according to this representation.

-

isEmpty() - If 'front == -1' queue is Empty. This operation requires O(1) time complexity which means constant time complexity.

-

insert() - New element is added at the end of the queue. This operation requires O(1) time complexity which means constant time complexity.

-

findMax() - To find the maximum element in the queue, we need to compare it with all the elements in the queue. This operation requires O(n) time complexity.

-

remove() - To remove an element from the max priority queue, first we need to find the largest element using findMax() which requires O(n) time complexity, then that element is deleted with constant time complexity O(1). The remove() operation requires O(n) + O(1) ≈ O(n) time complexity.

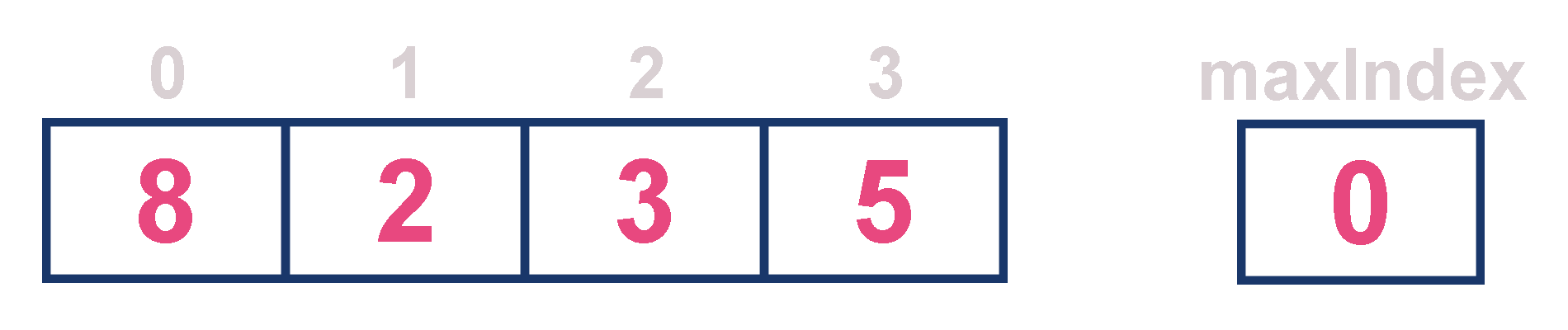

2. Using an Unordered Array (Dynamic Array) with the index of the maximum value¶

-

In this representation, elements are inserted according to their arrival order and the largest element is deleted first from max priority queue.

-

For example, assume that elements are inserted in the order of 8, 2, 3 and 5. And they are removed in the order 8, 5, 3 and 2.

-

Now, let us analyze each operation according to this representation.

-

isEmpty() - If 'front == -1' queue is Empty. This operation requires O(1) time complexity which means constant time complexity.

-

insert() - New element is added at the end of the queue with O(1) time complexity and for each insertion we need to update maxIndex with O(1) time complexity. This operation requires O(1) time complexity which means constant time complexity.

-

findMax() - Finding the maximum element in the queue is very simple because index of the maximum element is stored in maxIndex. This operation requires O(1) time complexity.

-

remove() - To remove an element from the queue, first we need to find the largest element using findMax() which requires O(1) time complexity, then that element is deleted with constant time complexity O(1) and finally we need to update the next largest element index value in maxIndex which requires O(n) time complexity. The remove() operation requires O(1)+O(1)+O(n) ≈ O(n) time complexity.

3. Using an Array (Dynamic Array) in Decreasing Order¶

-

In this representation, elements are inserted according to their value in decreasing order and largest element is deleted first from max priority queue.

-

For example, assume that elements are inserted in the order of 8, 5, 3 and 2. And they are removed in the order 8, 5, 3 and 2.

-

Now, let us analyze each operation according to this representation...

-

isEmpty() - If 'front == -1' queue is Empty. This operation requires O(1) time complexity which means constant time complexity.

-

insert() - New element is added at a particular position based on the decreasing order of elements which requires O(n) time complexity as it needs to shift existing elements inorder to insert new element in decreasing order. This insert() operation requires O(n) time complexity.

-

findMax() - Finding the maximum element in the queue is very simple because maximum element is at the beginning of the queue. This findMax() operation requires O(1) time complexity.

-

remove() - To remove an element from the max priority queue, first we need to find the largest element using findMax() operation which requires O(1) time complexity, then that element is deleted with constant time complexity O(1) and finally we need to rearrange the remaining elements in the list which requires O(n) time complexity. This remove() operation requires O(1) + O(1) + O(n) ≈ O(n) time complexity.

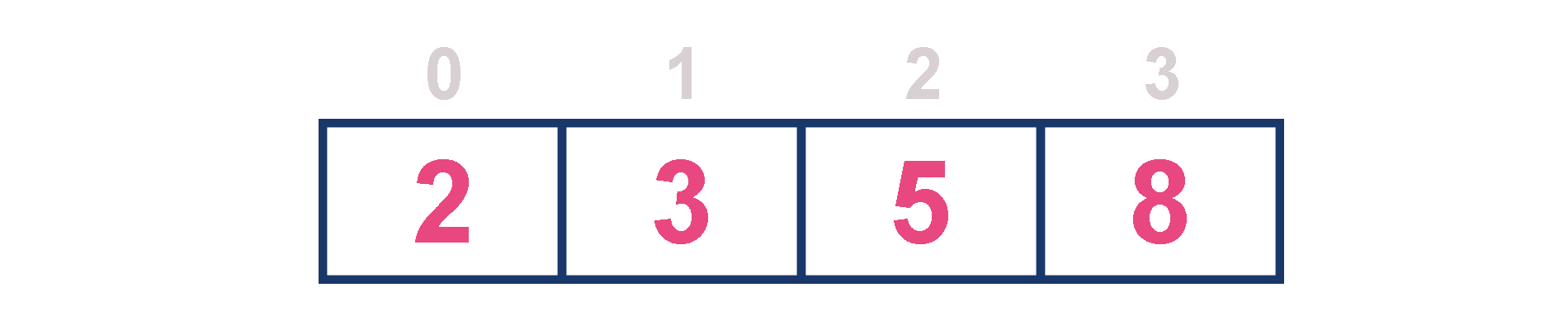

4. Using an Array (Dynamic Array) in Increasing Order¶

-

In this representation, elements are inserted according to their value in increasing order and maximum element is deleted first from max priority queue.

-

For example, assume that elements are inserted in the order of 2, 3, 5 and 8. And they are removed in the order 8, 5, 3 and 2.

-

Now, let us analyze each operation according to this representation...

-

isEmpty() - If 'front == -1' queue is Empty. This operation requires O(1) time complexity which means constant time complexity.

-

insert() - New element is added at a particular position in the increasing order of elements into the queue which requires O(n) time complexity as it needs to shift existing elements to maintain increasing order of elements. This insert() operation requires O(n) time complexity.

-

findMax() - Finding the maximum element in the queue is very simple becuase maximum element is at the end of the queue. This findMax() operation requires O(1) time complexity.

-

remove() - To remove an element from the queue first we need to find the largest element using findMax() which requires O(1) time complexity, then that element is deleted with constant time complexity O(1). Finally, we need to rearrange the remaining elements to maintain increasing order of elements which requires O(n) time complexity. This remove() operation requires O(1) + O(1) + O(n) ≈ O(n) time complexity.

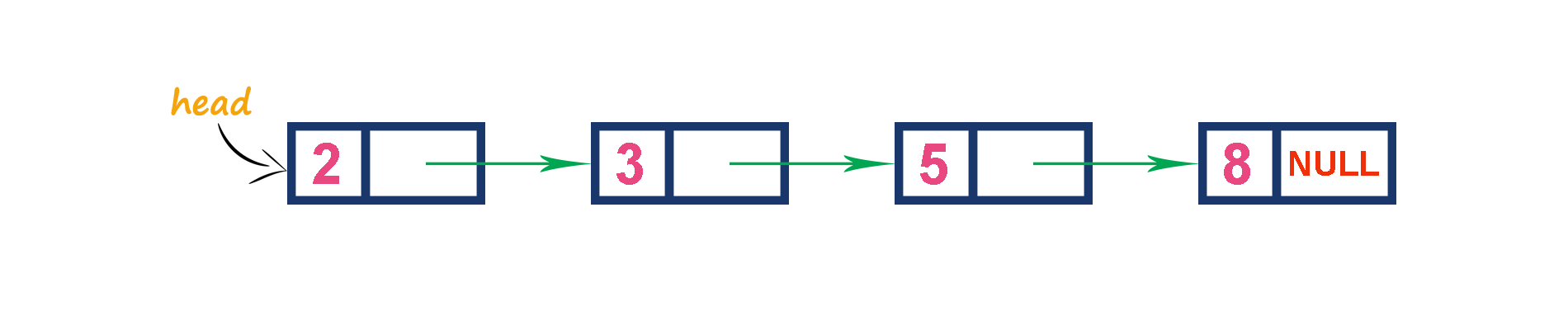

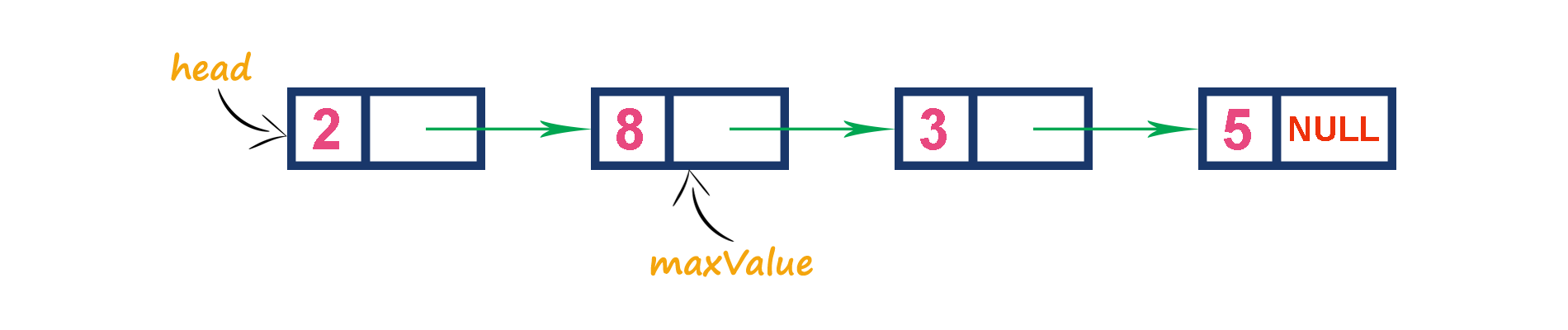

5. Using Linked List in Increasing Order¶

-

In this representation, we use a single linked list to represent max priority queue. In this representation, elements are inserted according to their value in increasing order and a node with the maximum value is deleted first from the max priority queue.

-

For example, assume that elements are inserted in the order of 2, 3, 5 and 8. And they are removed in the order of 8, 5, 3 and 2.

-

Now, let us analyze each operation according to this representation...

-

isEmpty() - If 'head == NULL' queue is Empty. This operation requires O(1) time complexity which means constant time complexity.

-

insert() - New element is added at a particular position in the increasing order of elements which requires O(n) time complexity. This insert() operation requires O(n) time complexity.

-